X user @wordgrammer writes:

Instead of SWE bench. OpenAI should just hire a single guy to rewrite the entire Linux kernel from scratch in Rust. He can use whatever LLM he wants, but no other humans are allowed to work on the project! Once he succeeds, we have AGI.

https://x.com/wordgrammer/status/1874414546594754639?t=eiZwpv3ozxMJfameNJZ3vw&s=19

I actually disagree that this necessarily means AGI, but I think it's a good way to measure progress.

Additional criteria:

Needs to be able to run Chromium compiled for Linux, without any changes to Chromium, and render a YouTube video on a 4K screen with sound.

Needs to support wifi.

Needs to run on the two most popular CPU architectures that Linux runs on. As of 2025, these are ARM and x86-64/AMD64.

If no one attempts this, a different project can count instead, provided I'm convinced it is equivalent or more complex. I am very familiar with OS kernels and think I can judge this well. Rewriting Chromium (including V8 and its other components) is a good example; Wordgrammer also suggests rewriting LLVM. If you have ideas for how else to evaluate other projects, please suggest them.

If all attempts fail, the longest one must have involved at least 80 hours of active work by the human for this to count as a clear NO. Otherwise, we will consider other projects.

I explicitly do NOT require that kernel modules for Linux can be loaded as-is, because Linux doesn't provide a stable API for kernel modules anyway.

There must be some minimal way to verify that only one person worked on this. A timestamped log of all messages sent to the AI is sufficient, but a streamed or fully filmed attempt is better.

The code can be based on the kernel's C code, but it must follow good Rust practices: no `unsafe` unless it is really required (e.g., in the memory allocator).
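
To illustrate what "no `unsafe` unless it is really required" can look like in practice, here is a minimal, hypothetical sketch (not taken from Linux or any Rust-for-Linux code): a user-space bump arena, loosely in the spirit of the allocator example, where the only `unsafe` is one small, documented block and callers get a fully safe API. `BumpArena`, `with_capacity`, and `alloc` are names invented for this illustration.

```rust
use std::cell::{Cell, UnsafeCell};

/// Hypothetical fixed-capacity bump arena (illustration only, not kernel code).
/// Bytes are handed out front to back and never reused, which is what makes
/// the single `unsafe` block below sound.
pub struct BumpArena {
    // `UnsafeCell` signals that these bytes may be mutated through `&self`.
    buf: Box<[UnsafeCell<u8>]>,
    // Index of the first byte not yet handed out.
    next: Cell<usize>,
}

impl BumpArena {
    /// Creates an arena holding `cap` zero-initialised bytes.
    pub fn with_capacity(cap: usize) -> Self {
        let buf: Box<[UnsafeCell<u8>]> = (0..cap).map(|_| UnsafeCell::new(0)).collect();
        BumpArena { buf, next: Cell::new(0) }
    }

    /// Hands out `len` fresh bytes, or `None` if the arena is exhausted.
    // Returning `&mut` from `&self` is intentional: regions never overlap.
    #[allow(clippy::mut_from_ref)]
    pub fn alloc(&self, len: usize) -> Option<&mut [u8]> {
        let start = self.next.get();
        let end = start.checked_add(len)?;
        if end > self.buf.len() {
            return None;
        }
        self.next.set(end);
        let region = &self.buf[start..end];
        // SAFETY: the cursor only moves forward and there is no reset, so
        // `start..end` has never been handed out and nothing else aliases it.
        // `UnsafeCell<u8>` has the same layout as `u8`, mutation through it is
        // permitted, and the bytes live as long as the borrow of `self`.
        Some(unsafe { std::slice::from_raw_parts_mut(region.as_ptr() as *mut u8, len) })
    }
}
```

Callers never write `unsafe` themselves; the soundness argument sits in one comment next to the one block that needs it. A real kernel allocator would also have to handle alignment, freeing, and concurrency, which this sketch deliberately leaves out.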

Minimal parts with a very good justification for remaining in C may remain in C (e.g., code that does heavy direct memory access and is unlikely to increase the local privilege escalation (LPE) attack surface).

I will not be trading in this market.

Update 2025-01-21 (PST) (AI summary of creator comment): WiFi support clarification:

Drivers located at Linux Wireless Drivers are considered sufficient to connect to a WiFi network.


RL (reinforcement learning) against Rust compiler feedback seems to be working.

“Needs to support wifi.”

Many drivers are closed-source binaries. In that case, there is no way to compile them for the “new” Linux kernel.

@BaryLevy, doesn’t this make it nearly impossible?

@parhizj, aren't the drivers here sufficient to connect to a WiFi network?

https://github.com/torvalds/linux/tree/master/drivers/net/wireless

@BaryLevy I was thinking of the firmware microcode for those WiFi adapters, which is closed-source.

Whether the ABI, firmware API, etc. can be replicated compatibly enough in Rust is, I guess, an open question. It is largely beyond my expertise, so for the moment I'm going to assume it's possible.

@MalteKretzschmar's comment below about lack of interest might be the most important factor.

Other than that, I'm going to bet NO based on this random person's comment, which seems the most pertinent at present:

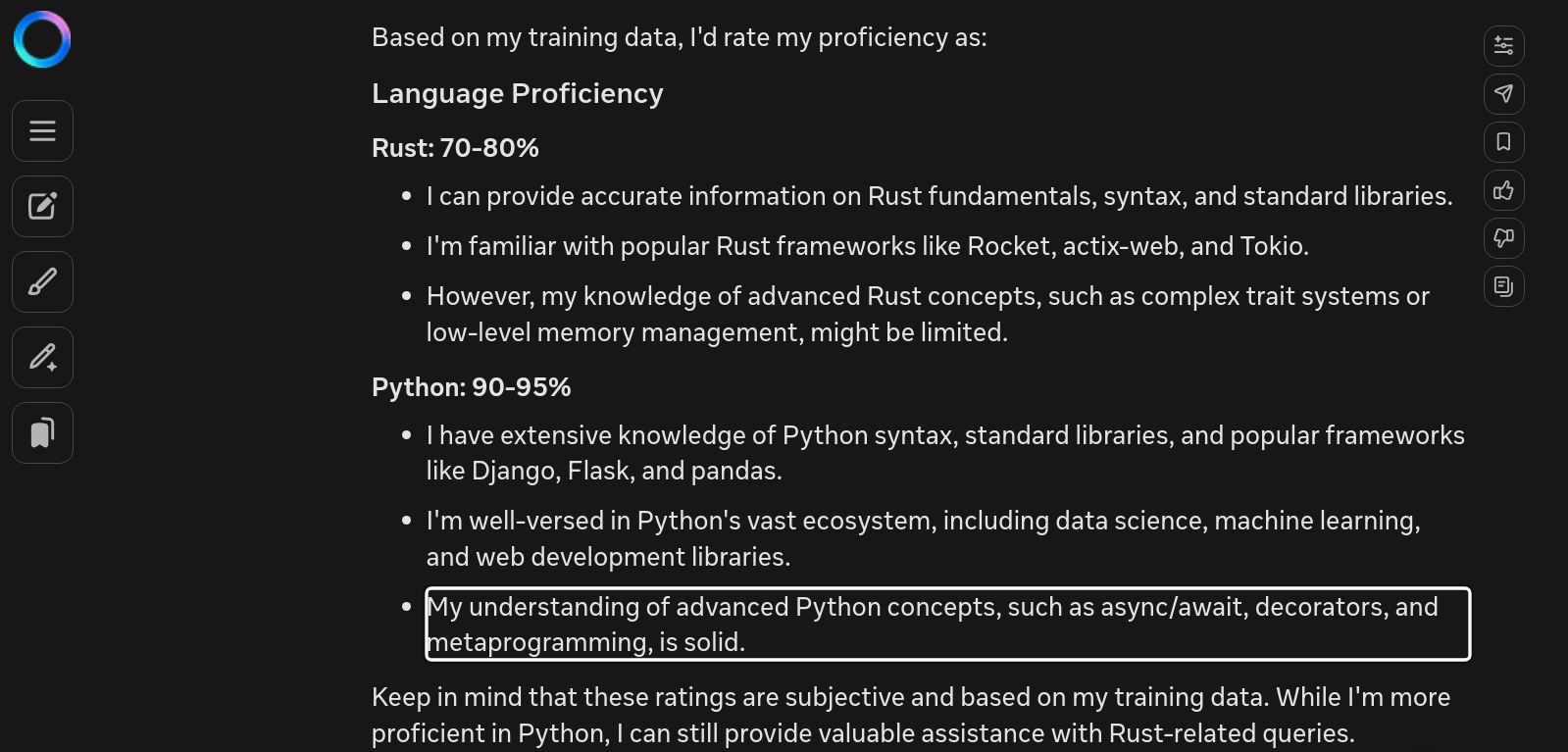

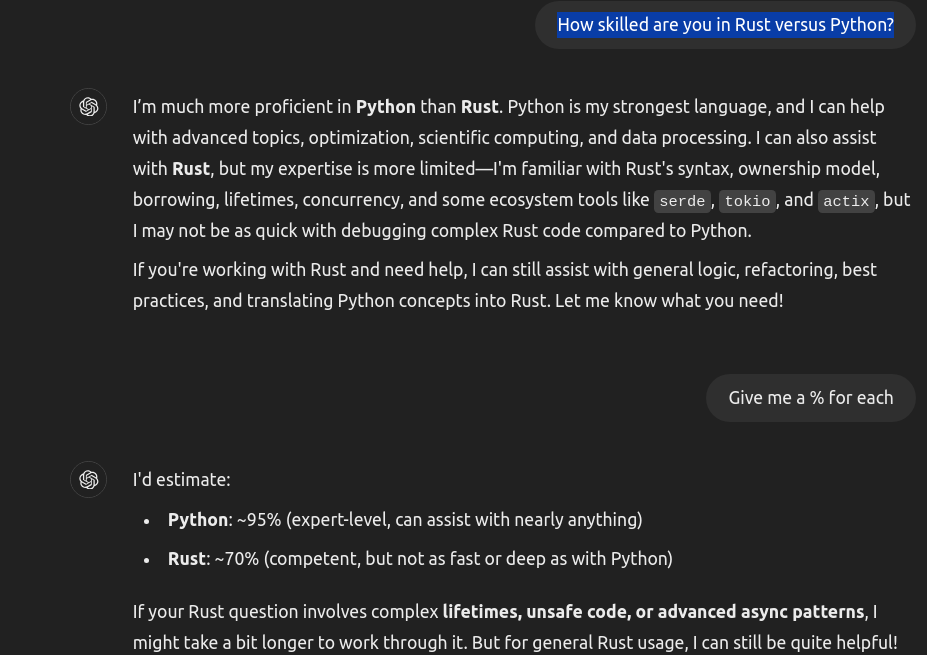

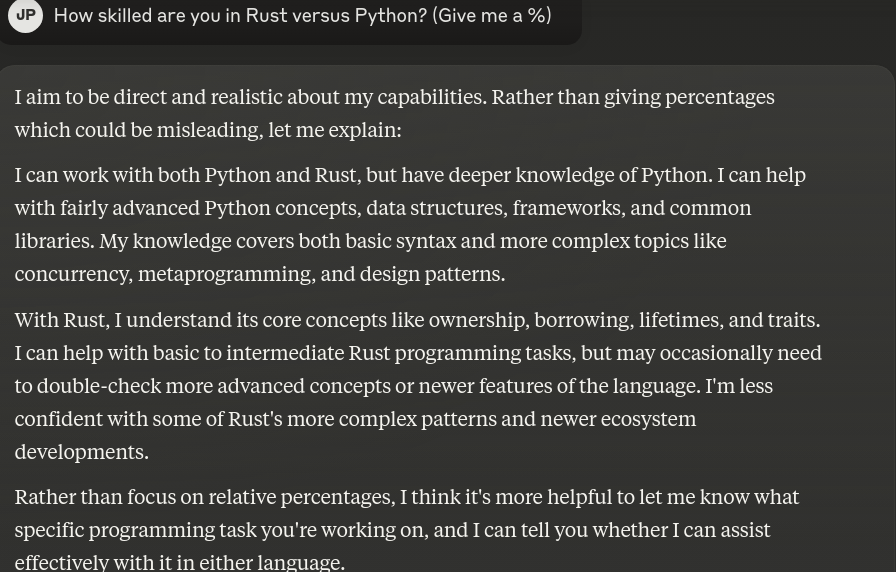

LLM self-reports seem to confirm that it is much weaker in Rust...

This seems to come down simply to the amount and quality of training data available from public sources (e.g., GitHub).

Based on my own very limited experience translating between Python and a few other languages, I can imagine this being a vertical cliff for present capabilities.

What would make it more likely is one of the following: (a) more training data, though I don't expect Rust training data to be massively increased, either by humans or synthetically, within this time frame; (b) improved ability of LLMs to learn from existing data, which, as it applies here, does not seem to be forthcoming in the next year anyway; or (c) a new AI paradigm in the next 3-4 years, beyond LLMs or LLMs with add-ons, since I don't believe an LLM with glue on top will be efficient enough (in speed or cost) to make this possible in that time frame.

This is mostly guesswork based on trends from the last couple of years, though.