An outcome is "okay" if it gets at least 20% of the maximum attainable cosmopolitan value that could've been attained by a positive Singularity (a la full Coherent Extrapolated Volition done correctly), and existing humans don't suffer death or any other awful fates.

This market is a duplicate of https://manifold.markets/IsaacKing/if-we-survive-general-artificial-in with different options. https://manifold.markets/EliezerYudkowsky/if-artificial-general-intelligence-539844cd3ba1?r=RWxpZXplcll1ZGtvd3NreQ is this same question but with user-submitted answers.

(Please note: It's a known cognitive bias that you can make people assign more probability to one bucket over another, by unpacking one bucket into lots of subcategories, but not the other bucket, and asking people to assign probabilities to everything listed. This is the disjunctive dual of the Multiple Stage Fallacy, whereby you can unpack any outcome into a big list of supposedly necessary conjuncts that you ask people to assign probabilities to, and make the final outcome seem very improbable.

So: That famed fiction writer Eliezer Yudkowsky can rationalize at least 15 different stories (options 'A' through 'O') about how things could maybe possibly turn out okay; and that the option texts don't have enough room to list out all the reasons each story is unlikely; and that you get 15 different chances to be mistaken about how plausible each story sounds; does not mean that Reality will be terribly impressed with how disjunctive the okay outcome bucket has been made to sound. Reality need not actually allocate more total probability into all the okayness disjuncts listed, from out of all the disjunctive bad ends and intervening difficulties not detailed here.)
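To make the arithmetic behind both effects concrete, here is a minimal sketch. The per-stage and per-story numbers are hypothetical, chosen only for illustration, and the function names are not from any library.

```python
import math

def conjunctive_estimate(stage_probs):
    """Multiply per-stage probabilities, as the Multiple Stage Fallacy does."""
    return math.prod(stage_probs)

def naive_disjunctive_total(story_probs):
    """Sum per-story probabilities as if the stories were mutually exclusive."""
    return sum(story_probs)

# Hypothetical numbers, not estimates for this market.
stages = [0.8] * 10    # ten supposedly necessary steps, each judged 80% likely
stories = [0.03] * 15  # fifteen "okay" stories, each casually given 3%

print(f"conjunctive estimate: {conjunctive_estimate(stages):.3f}")
print(f"naive disjunctive total: {naive_disjunctive_total(stories):.2f}")
```

With these made-up inputs, ten individually likely stages multiply down to roughly 11%, while fifteen individually unlikely stories add up to roughly 45%. In both cases the total is driven by how finely the bucket was unpacked, which is the point of the note above.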

@EliezerYudkowsky People will be arguing about which of these outcomes has occurred after supposed AGI is invented, at a time when none of the outcomes has actually occurred, because what we have is not exactly AGI and is not exactly aligned either. People will wrongly think that the critical window has passed and that the threat was overblown.

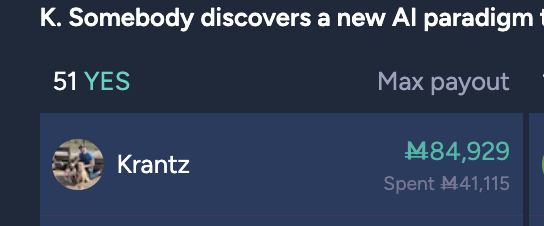

@Krantz want to exit our positions here? I put up a limit order right next to the market price. You should probably use that money on things that resolve sooner!

I appreciate the offer, but I feel pretty confident that this could resolve soon.

I'd feel more confident with this phrasing though:

Someone discovers a new paradigm in intelligence (collective, not artificial) that's powerful enough and matures fast enough to beat deep learning to the punch, and the new paradigm is much more alignable than giant inscrutable matrices of floating-point numbers.

What I'm hoping we build isn't actually AI, so I might lose on that technicality, but I am confident that it will be the reason for an outcome we might consider 'ok', and to me that's worth putting money on.

In general, I value the survival of my family and friends above winning any of these wagers.

The primary objective for wagering in these markets, for me, is to convey information to intelligent people that have an influence over research.

I try not to do things unless I have reasons to do them.

Fun fact: if my system existed today, you could add the proposition 'Krantz should sell his position in K.' and the CI would compare each of our reasons for and against that proposition, map them against each other, and give me the proposition (one that exists on your ledger and not mine) that would most effectively close the inferential distance between our cruxes.

I bought E from 1% to 8% because maybe the CEV is natural — like, maybe the CEV is roughly hedonium (where "hedonium" is natural/simple and not related to quirks about homo sapiens) and a broad class of superintelligences would prioritize roughly hedonium. Maybe reflective paperclippers actually decide that qualia matter a ton and pursuing-hedonium is convergent. (This is mostly not decision-relevant.)

I am trying to do this differently here: https://manifold.markets/dionisos/if-we-survive-general-artificial-in-z3suausl60

@dionisos good point, G is probably the position of the "there's no such thing as intelligence" crowd

Two questions:

Why is this market suddenly insanely erratic this past week?

Why are so many semi-plausible-sounding options being repeatedly bought down to ludicrously low odds (<0.5%), when you'd expect almost all of them to be within an order of magnitude of the uniform base rate across options, ~6%?
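For reference, the base-rate arithmetic in the second question can be sketched as follows. The option count of 15 is an assumption taken from the description's "options 'A' through 'O'"; the market may list more, and the helper names are made up for illustration.

```python
def uniform_baseline(n_options):
    """Probability each option gets if all options are treated as equally likely."""
    return 1.0 / n_options

def within_order_of_magnitude(p, baseline):
    """True if p is no more than 10x above or below the baseline."""
    return baseline / 10 <= p <= baseline * 10

n_options = 15  # assumption: options 'A' through 'O' only
baseline = uniform_baseline(n_options)

print(f"uniform baseline: {baseline:.1%}")
print(f"one order of magnitude below: {baseline / 10:.2%}")
print("0.5% within range:", within_order_of_magnitude(0.005, baseline))
```

Under that assumption the uniform baseline is about 6.7%, so anything below about 0.67% is already more than an order of magnitude under it, which includes the <0.5% prices mentioned.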

Some of the options like H seem... logically possible yes, but a bit out there.

As for #2, that's why I bet up E a bit. I find it the most plausible contingent on a slightly superhuman AGI happening within the next 100 years, since it's the only real "it didn't work, but nothing that bad happened" option.

this is way too high, but it's a Keynesian beauty contest

At the time of writing this comment, the interface tells me that if I spend M3 on this answer, it will move it from 0% to 10% (4th place).

I just wanted to check that this is right given that the mana pool is currently around M150k, subsidy pool ~M20k.

(The other version of this question seemed to have a similar issue.)

@MugaSofer Per the inspiration market: 'It resolves to the option that seems closest to the explanation of why we didn't all die. If multiple reasons seem like they all significantly contributed, I may resolve to a mix among them.'