Conjecture is an alignment firm founded by Connor Leahy, also a co-founder of EleutherAI. They officially have short (5-year) timelines until doom. They mostly work on interpretability, on generating new alignment research agendas through their work in Refine and SERI MATS, and on convincing ML researchers and their labs that alignment is an important, hard problem.

Some have worried that their short timelines and high probability of doom will lead them to advocate riskier strategies, leaving us worse off in worlds where timelines are longer than 5 years.

This market is, of course, conditional on Conjecture being wrong that we'll all die in 5 years. I'd like to know how much damage they do in that world.

Prediction: compute per dollar improves ~10x every half-decade and no “alignment” ideas will ever matter (as always, stopping Moore’s law is much easier than pseudoreligious nonsense about friendly AI)

Hopefully some of you realize you’re the “mark” in this game: they claim they “want to save the world through X” and then they just run a good business.

Nothing wrong with this. He’s clearly much smarter than Sam Altman, but recognize when he’s just saying crazy things to attract attention, which is what the “saving the world from AGI by running a super profitable AGI-ish business” song and dance is about.

I generally expect Conjecture to be high variance, given its founders' unilateralist tendencies and their belief in extremely short timelines. I can imagine them choosing to make lots of risky bets because of this. I still expect it to be net positive on balance, but 76% sounds high, especially given that large swathes of research done by AI safety researchers plausibly seem to have backfired.

It seems hard for research to be net negative, and their field-building is collaborative with other people's models, so I wouldn't expect it to be net negative either (especially since Refine is likely ending). The main way Conjecture could be net negative is through broader social or community effects of influencing people the wrong way. I don't think they currently have that kind of social power, but plausibly it would grow as progress accelerates and people's timelines get shorter. Even then, it seems unlikely that you or the field will be in an epistemic state in 2027 where you can say "Ah, Conjecture was clearly wrong," as opposed to still being uncertain whether AGI arrives in just a year or two more. I'd put it at 80%, I guess.

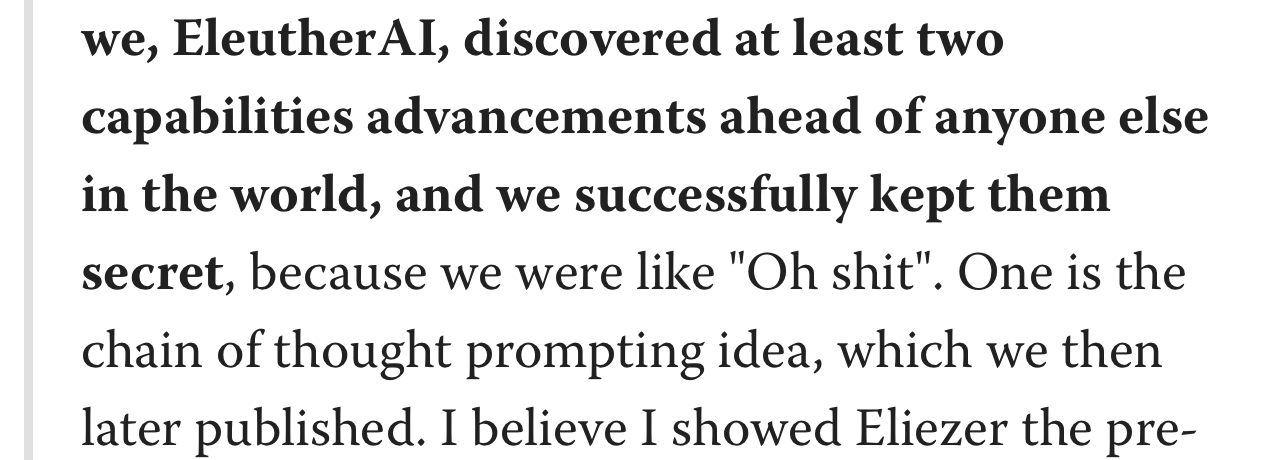

@EliasSchmied Research can straightforwardly be net negative if they end up dramatically increasing capabilities with few alignment insights to show for it.

@GarrettBaker Oh yeah, you're right of course. I guess I don't think that's very likely given their infohazard policy.

There's also the effect of bringing more attention to AI research in general; I forgot about that too.

@EliasSchmied Also causing commercialization of new technologies (where I think the key bottleneck is usually just demonstrating feasibility).