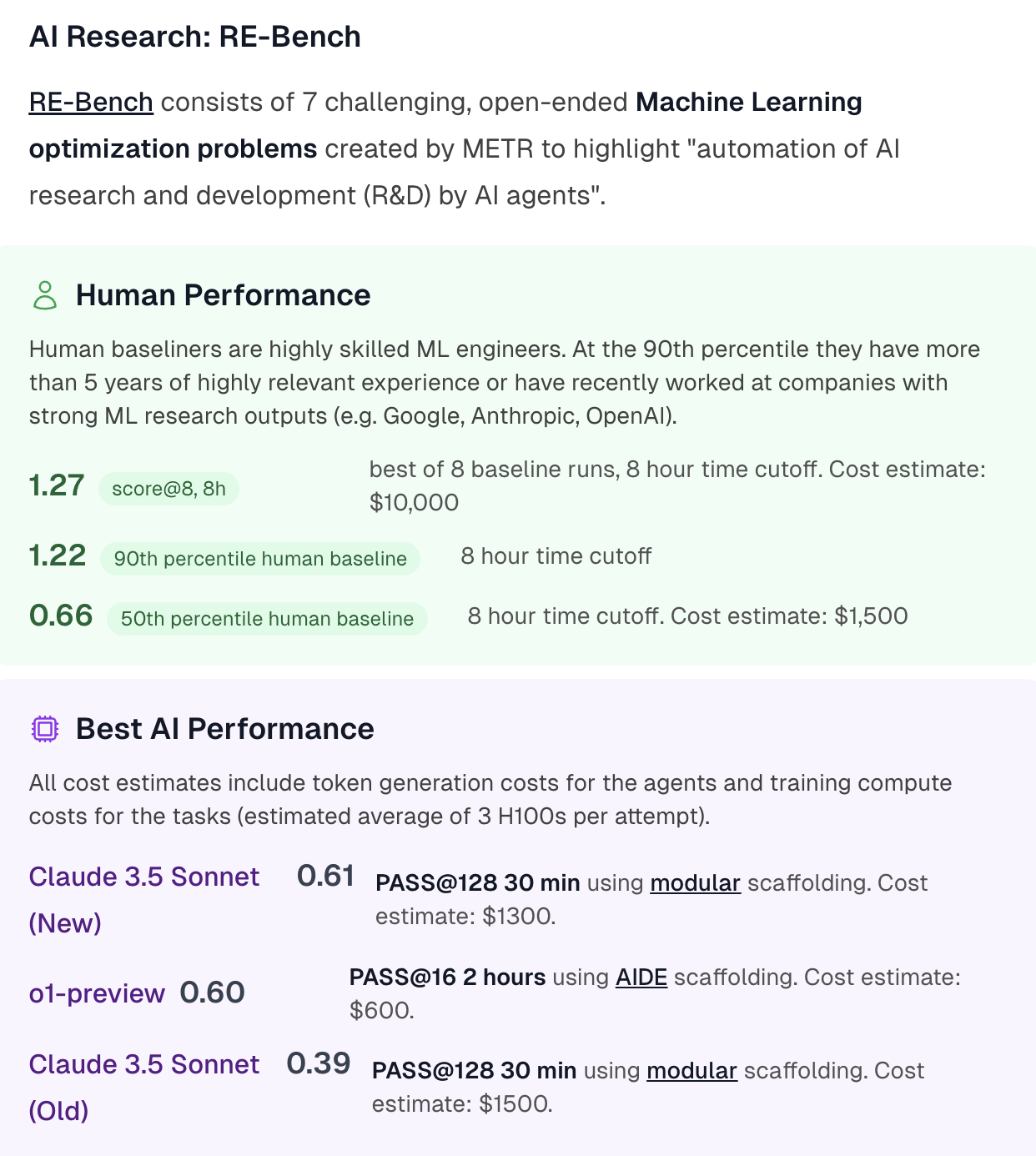

This market matches AI Research: RE-Bench from the AI 2025 Forecasting Survey by AI Digest.

This question asks for the best performance by an AI system on RE-Bench as of December 31st, 2025.

Resolution criteria

Resolution will use AI Digest as its source. If the reported number falls exactly on a boundary (e.g. 0.80), the higher choice will be used (i.e. 0.8-1.0).
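The boundary rule can be illustrated with a minimal sketch. The bucket edges below are hypothetical; only the 0.8-1.0 choice is named in this description, and the function name `bucket_for` is made up for illustration.

```python
from bisect import bisect_right

# Hypothetical bucket edges. Only the 0.8-1.0 choice appears in the
# market description; the other edges are assumptions for illustration.
EDGES = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]


def bucket_for(score, edges=EDGES):
    """Return the (low, high) bucket for a score.

    A score exactly on an interior boundary goes to the higher bucket,
    because bisect_right places it to the right of the matching edge.
    """
    if score < edges[0] or score > edges[-1]:
        raise ValueError("score is outside the assumed bucket range")
    index = bisect_right(edges, score) - 1
    # The top edge itself has no higher bucket, so keep it in the last one.
    index = min(index, len(edges) - 2)
    return edges[index], edges[index + 1]


print(bucket_for(0.80))  # boundary case: resolves to the higher choice
print(bucket_for(0.79))  # just below the boundary: the lower choice
```

Using `bisect_right` rather than `bisect_left` is what sends an exact boundary value to the higher choice.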

Which AI systems count?

Any AI system counts if it operates within realistic deployment constraints and doesn't have unfair advantages over human baseliners.

Tool assistance, scaffolding, and any other inference-time elicitation techniques are permitted as long as:

There is no systematic unfair advantage over the humans described in the Human Performance section (e.g. it would be unfair if AI systems could have multiple outputs autograded while humans couldn't, or if AI systems had access to the internet while humans didn't).

Having the AI system complete the task does not use more compute than could be purchased with the wages needed to pay a human to complete the same task to the same level.
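As a rough sketch of the compute condition above, the check compares what the AI run cost in compute against what a human would be paid for the same task. The function name, parameters, and all dollar figures are hypothetical; the description does not specify wages, hours, or compute prices.

```python
def within_compute_budget(ai_compute_cost_usd, human_hours, hourly_wage_usd):
    """Return True if the AI run cost no more than the human wage bill.

    All inputs are assumptions supplied by whoever evaluates the run;
    none of these values come from the market description.
    """
    human_wage_cost_usd = human_hours * hourly_wage_usd
    return ai_compute_cost_usd <= human_wage_cost_usd


# Hypothetical numbers: an 8-hour task at $100/hour gives an $800 budget.
print(within_compute_budget(300.0, human_hours=8, hourly_wage_usd=100.0))
print(within_compute_budget(900.0, human_hours=8, hourly_wage_usd=100.0))
```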

The PASS@k elicitation technique (which automatically grades and chooses the best out of k outputs from a model) is a common example that we do accept on this benchmark because human baseliners in RE-Bench also have access to scoring metrics (e.g. loss/runtime), so PASS@k doesn't constitute a clear unfair advantage.
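The selection step described above (grade k outputs automatically, keep the best) can be sketched as follows. This is an illustration only, not the benchmark's actual harness; `best_of_k`, `score_fn`, and the sample scores are hypothetical.

```python
def best_of_k(candidates, score_fn):
    """Grade every candidate output and return (best_candidate, best_score).

    score_fn stands in for an automatic scoring metric; higher is assumed
    to be better. For a metric such as loss or runtime, negate it first.
    """
    if not candidates:
        raise ValueError("need at least one candidate output")
    scored = [(candidate, score_fn(candidate)) for candidate in candidates]
    return max(scored, key=lambda pair: pair[1])


# Hypothetical example: three attempts with made-up normalized scores.
attempt_scores = {"attempt_a": 0.31, "attempt_b": 0.72, "attempt_c": 0.55}
best, score = best_of_k(list(attempt_scores), attempt_scores.get)
print(best, score)
```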

If there is evidence of training contamination leading to substantially increased performance, scores will be accordingly adjusted or disqualified.

The sweepstakes market for this question has been resolved to partial as we are shutting down sweepstakes. Please read the full announcement here. The mana market will continue as usual.

Only markets closing before March 3rd will be left open for trading and will be resolved as usual.

Users will be able to cashout or donate their entire sweepcash balance, regardless of whether it has been won in a sweepstakes or not, by March 28th (for amounts above our minimum threshold of $25).