Resolves YES if GPT-5 is publicly released before Jan 1, 2025; resolves NO otherwise.

Update from March 18: If the next OpenAI flagship model deviates from the GPT-N naming scheme and it is clear that OpenAI will never release a model named GPT-5 (e.g., they have completely changed the trajectory of their naming scheme), then I will count it as "GPT-5" regardless.

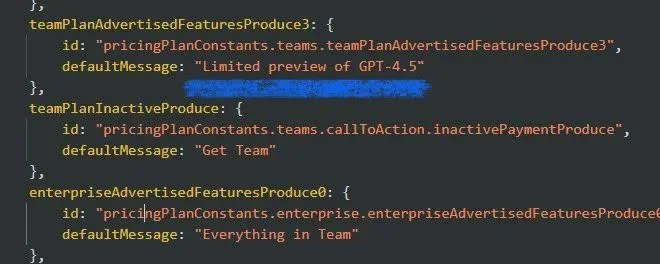

However, if the next flagship model is not called GPT-5 but still conforms to the OpenAI GPT-N naming scheme (e.g., GPT-4.5), or it is implied that a model called GPT-5 will eventually be released (i.e., they are going to continue with the same naming scheme), then I will not count it as "GPT-5".

Additional update from Manifold staff before sweepifying:

"Publicly released" includes a release behind their subscription, or a roll-out where some people have access while others are still waiting. A public release that is available only through the API will still resolve YES. Dates for this market are in PST.

@DanielMosmondor They would have to say "there will not be GPT-5", which they haven't said (and it seems unlikely they will).

@DanielMosmondor I think 4o is still their flagship model. o1-pro isn't meant for general-purpose questions.

https://x.com/OpenAI/status/1864328928267259941

probably just hype, but there's a chance they deviate from the GPT-N naming scheme once and for all...

@DogmaticRationalist I think that would only count if they also release a new flagship model, right? They haven't done that yet.


@fxgn Besides the fact that o1 hasn't even been fully released, it's also not meant for general-purpose questions.

@fxgn sorry, got 4o and o1 confused (which probably says enough about o1). Maybe Orion will be something new, but if so, I doubt it comes out this year.

@fxgn I still think it's NO because, IMO, "clearly no future gpt-5" by EOY should pretty much require them to announce that there will never be a gpt-5. That being said, there's enough up for debate in the description that I happily forwent the last 7% and exited my position.

@DanielMosmondor The fact that it doesn't even have a separate name, beyond being a sub-version of a sub-version of GPT-4, makes me say it definitely shouldn't count. From what I've heard, it's also not significantly better than o1.

@ShakedKoplewitz @DanielMosmondor

o1 is part of "a new series of models", not a sub-version.

o1 pro isn't a new model; it's a mode for o1 with more compute time. By "not new" I mean not released within the last few days, because o1 itself is fairly new.

I still don't think this clearly indicates that gpt-5 is dead, though. Messy situation lol

@wan Messy indeed. Moreover, whether GPT-5 will ever be released probably won't be known until the end of the year.

@DanielMosmondor yes, and because of how the description is worded, I definitely think this resolves NO (unless they announce there will be no gpt5). But putting mana in this market again would require me to believe that whoever resolves it agrees.