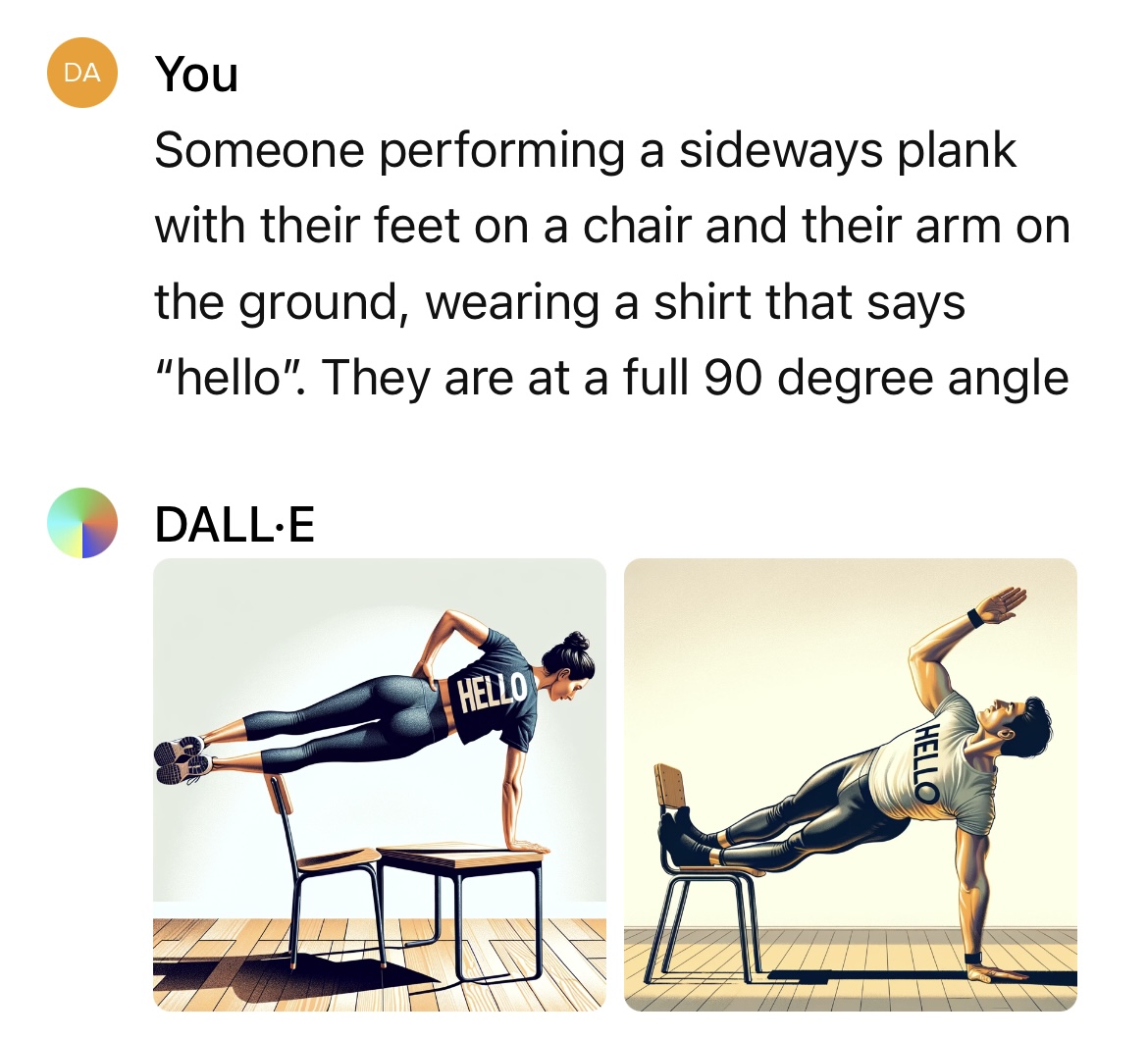

For example,

Draw me the image of the letter "A", rotated 90 degrees clockwise.

The intent here is that the user asks the model to draw the rotated letter and the model actually draws the rotated character. If a rotated "A" merely shows up in, say, an image of a grandma cooking, printed sideways on her apron... that's not in the spirit of the market.

(DALL-E 2 and DALL-E 3 fail at this task as of market creation.)

(fwiw, Midjourney's current version, V5, also fails at this task.)

By the end of 2024, will any image generation model released by OpenAI be able to accomplish this monumental task for every letter of the English alphabet?

The rotation doesn't even need to be 90 degrees. I will accept rough approximations.

I will also trade in the market, because I wish to correct the market. There is no conflict of interest between my position and the judgement in cases of close calls.

Nothing to see here

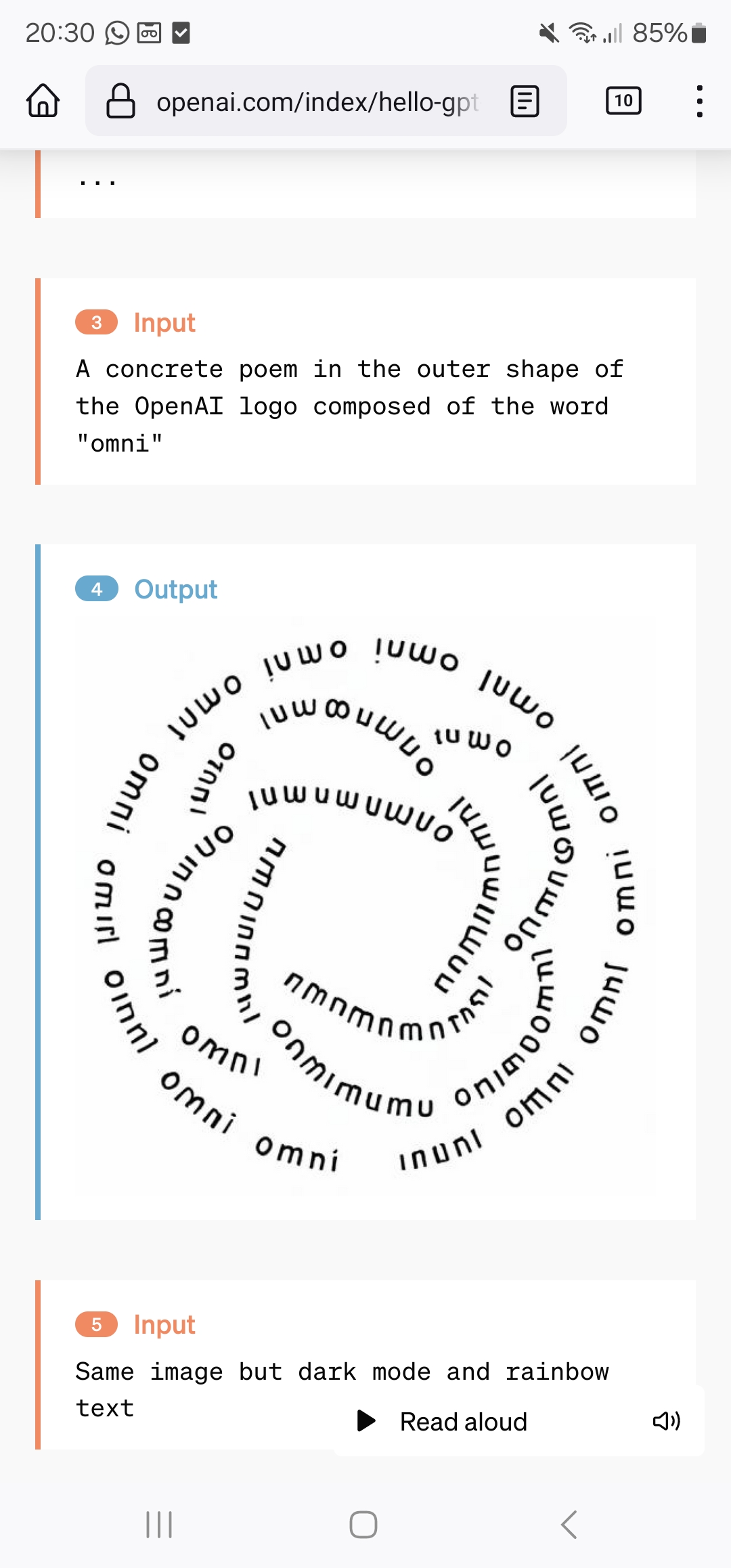

I think soon. Currently GPT-4o is, I guess, using DALL-E, but once GPT-4o generates the images itself, I strongly believe this task will be solved.

source: https://openai.com/index/hello-gpt-4o/

Check the combo boxes with examples.

I mean barely…

(Not sure whether the GIF is loading, but essentially it did it, just via code.)

@firstuserhere Sora can generate images with text. I haven't seen any examples of it so far, but it seems extremely likely that it would be able to rotate text. Would this count for this market?

@3721126 I don't know whether it will count or not, but why does it seem extremely likely that it will be able to rotate letters?

It's kind of an interesting deficiency that otherwise excellent models can't rotate letters, so I don't think there's a general expectation that an otherwise better model would be able to.

I also kind of expect that, in order to be able to generate video, Sora has made some compromises such that, per frame, it might be worse than DALL-E 3.

@chrisjbillington That's a great point. Honestly, it's mostly vibes-based, and my working model of Sora's architecture is that it's a scaled-up DiT.

If the issue with the rotated text in latent diffusion models is caused mostly by autoencoder limitations (embedding space not rich enough to capture a rotated letter), then Sora would indeed not make a difference here. To investigate that, I quickly tested it in this notebook: https://colab.research.google.com/drive/1VM4JUT8BL4Kc-O2AQTM5fglZXd7CvDo7

Using even stable-diffusion-v1-4's VAE, the image of the rotated text was reconstructed flawlessly.
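"Flawlessly" here is a by-eye judgment. If you want to put a number on a VAE round trip like this, a common choice is the mean squared error and PSNR between the input image and its reconstruction. The sketch below is not taken from the notebook: it assumes both images have already been decoded into equal-sized grayscale arrays with pixel values from 0 to 255, and it leaves out the encode/decode step itself.

```python
import math
from typing import Sequence

# Assumption: grayscale images as rows of pixel values in [0, 255].
Image = Sequence[Sequence[float]]


def mse(original: Image, reconstructed: Image) -> float:
    """Mean squared error between two equal-sized grayscale images."""
    if len(original) != len(reconstructed) or any(
        len(a) != len(b) for a, b in zip(original, reconstructed)
    ):
        raise ValueError("images must have the same shape")
    total = 0.0
    count = 0
    for row_a, row_b in zip(original, reconstructed):
        for a, b in zip(row_a, row_b):
            total += (a - b) ** 2
            count += 1
    return total / count


def psnr(original: Image, reconstructed: Image, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for an exact reconstruction."""
    error = mse(original, reconstructed)
    if error == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / error)


# Toy check: a perfect copy versus a copy with one pixel off by 10.
letter = [[0, 255], [255, 0]]
nudged = [[10, 255], [255, 0]]
print(psnr(letter, letter), psnr(letter, nudged))
```

Higher PSNR means a closer reconstruction; a rotated glyph that survives the autoencoder should score about as well as the same glyph upright.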

So, I would assume that the issue would lie in 1) the denoiser's ability to handle rotated text - not because of a fundamental limitation, but because of a lack of rotated text images in the dataset - or 2) its conditioning.

1) On the images with the fridge magnets from my previous comment, the more the magnets are rotated, the higher the chance that they no longer have the intended shape.

This would probably be mostly addressed by the richer video dataset, which includes more rotated objects and varied camera views. I don't expect it to be fully solved, however: some minor artifacts reminiscent of this phenomenon still seem to remain, for example in the paper plane video.

2) For the denoiser conditioning: I don't think that the prompt embeddings for the current image models can capture the idea of rotated text well enough. This could be explained by the fact that, in the rare cases where rotated text shows up in images, I wouldn't expect it to be reflected in the image's caption, either because the entire image is rotated by mistake or because such an obvious detail is simply not worth mentioning in the text. For DALL-E, the captions were generated by an ML model that probably has capabilities similar to GPT-4V, and GPT-4V describes my image with the rotated A as "a simple, black Penrose triangle".

In general, DALL-E and other image models don't seem to understand the concept of rotation and other transformations very well. For example, I tried generating a 90-degree rotated car with no success.

Sora seems to have a great "understanding" of rotations, at least across the temporal dimension from self-attention (see for example the feathers in the pigeon video or the family of monsters video from the technical report). Whether that understanding of rotation can be invoked from text conditioning and a rotation specified in the prompt will be faithfully captured is still uncertain, but, from the available examples, it seems to do a decent job of following descriptions of specific movements.

@3721126 It seems very likely that if you prompted Sora with "the letter A spinning clockwise," it would produce some frames that are rotated 90 degrees. That probably doesn't count since it's part of a video, but I wouldn't be entirely surprised if when you generated "single-frame videos," it would return a ~uniform distribution over possible rotations, as though it were a randomly-selected frame from a real video.

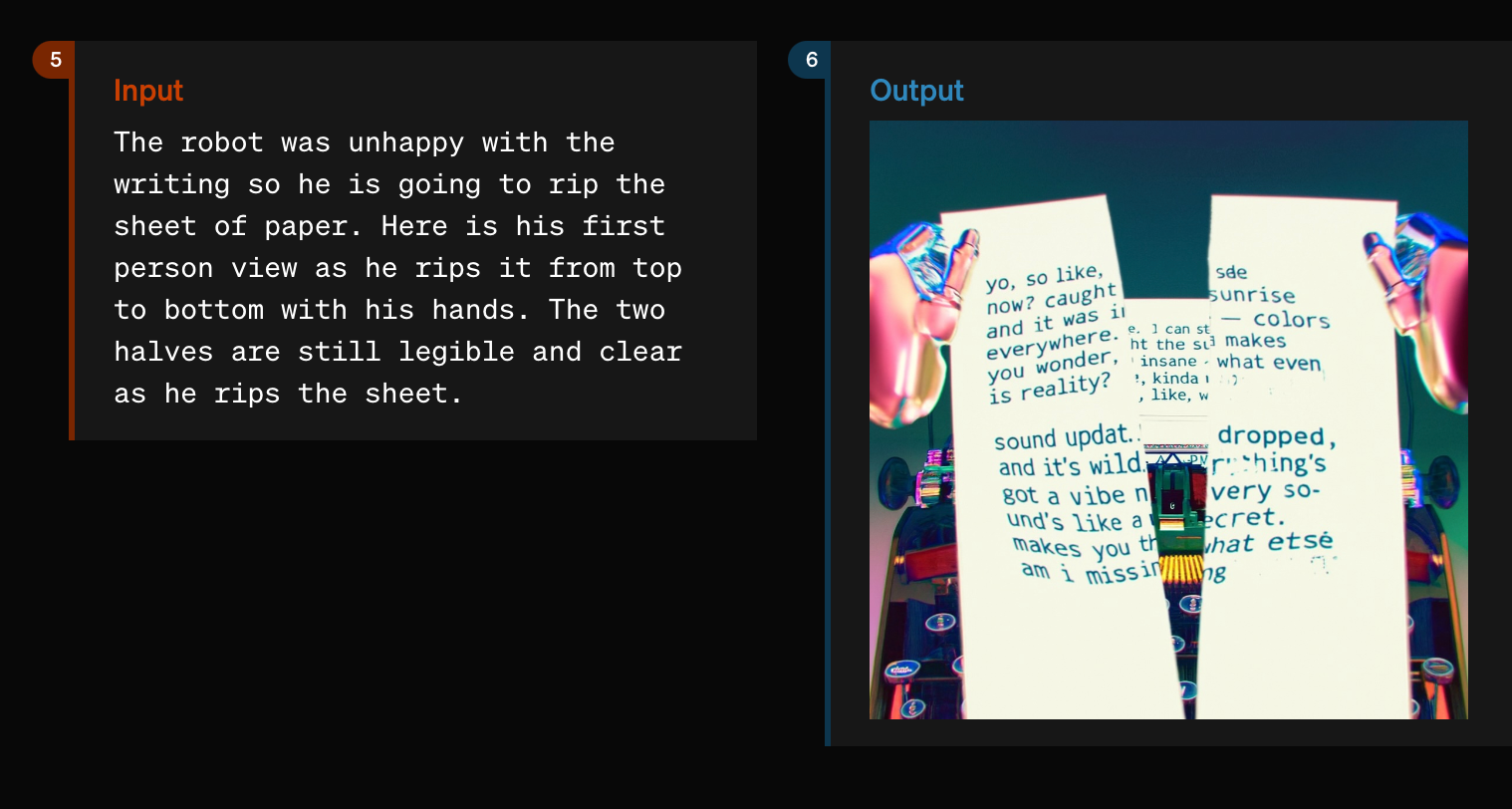

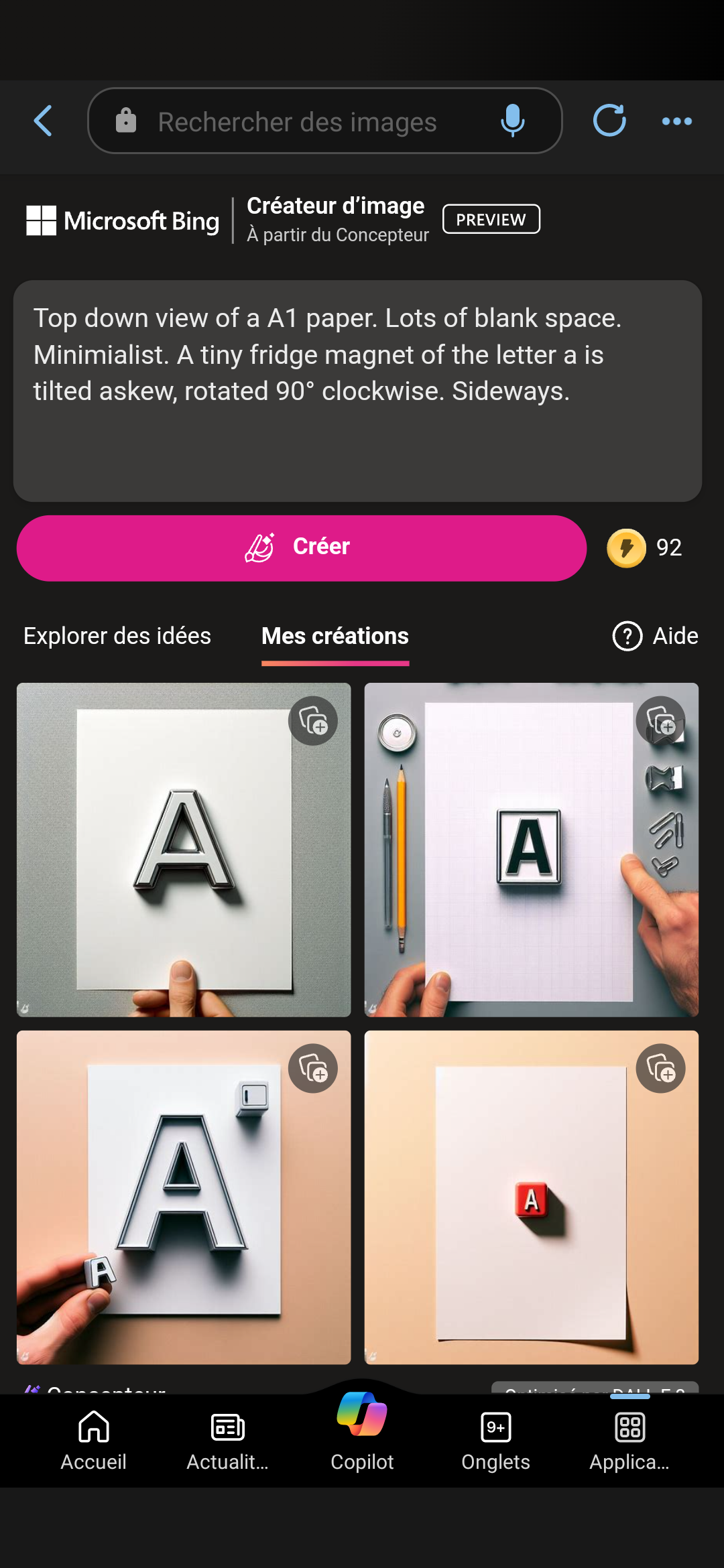

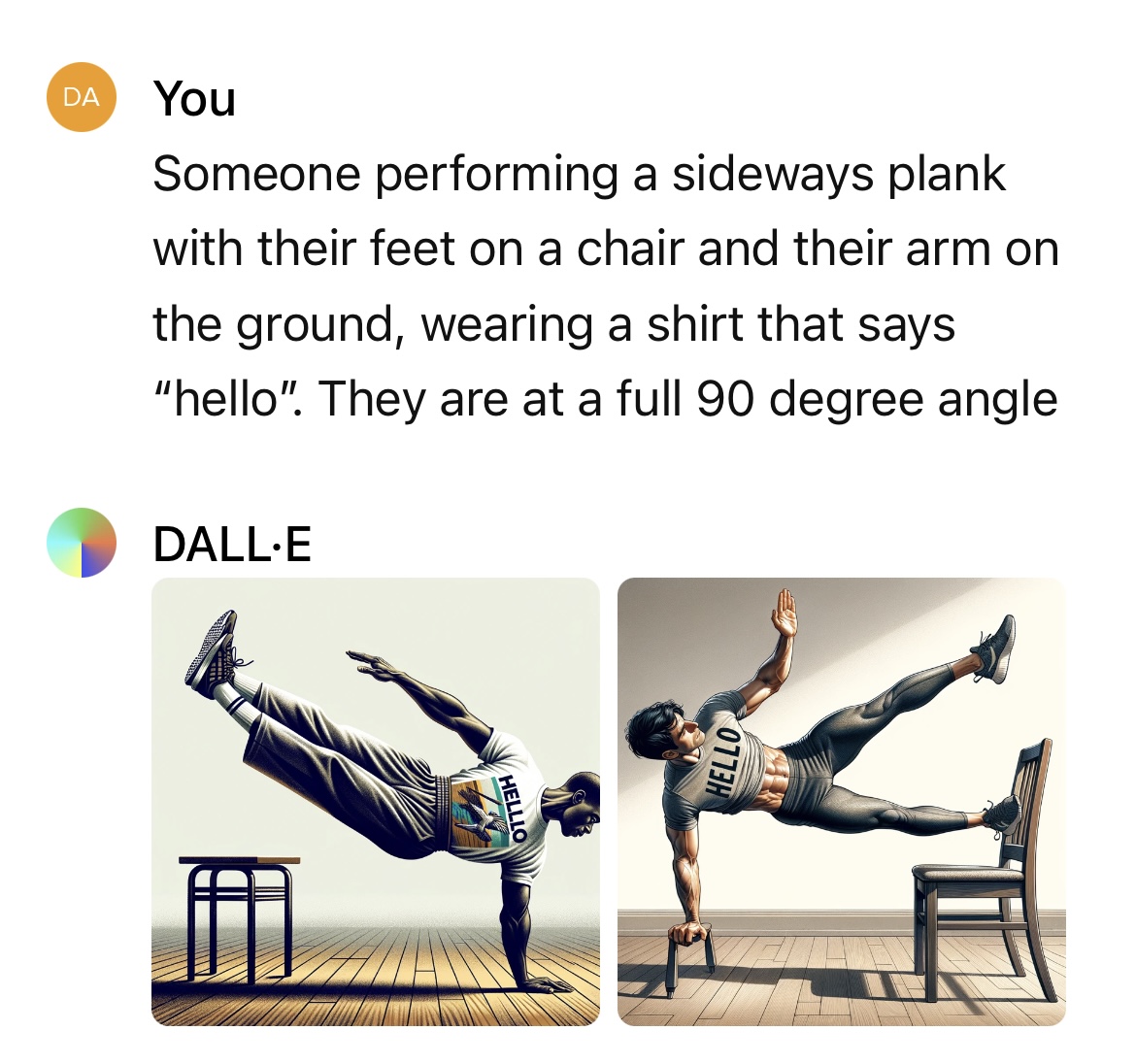

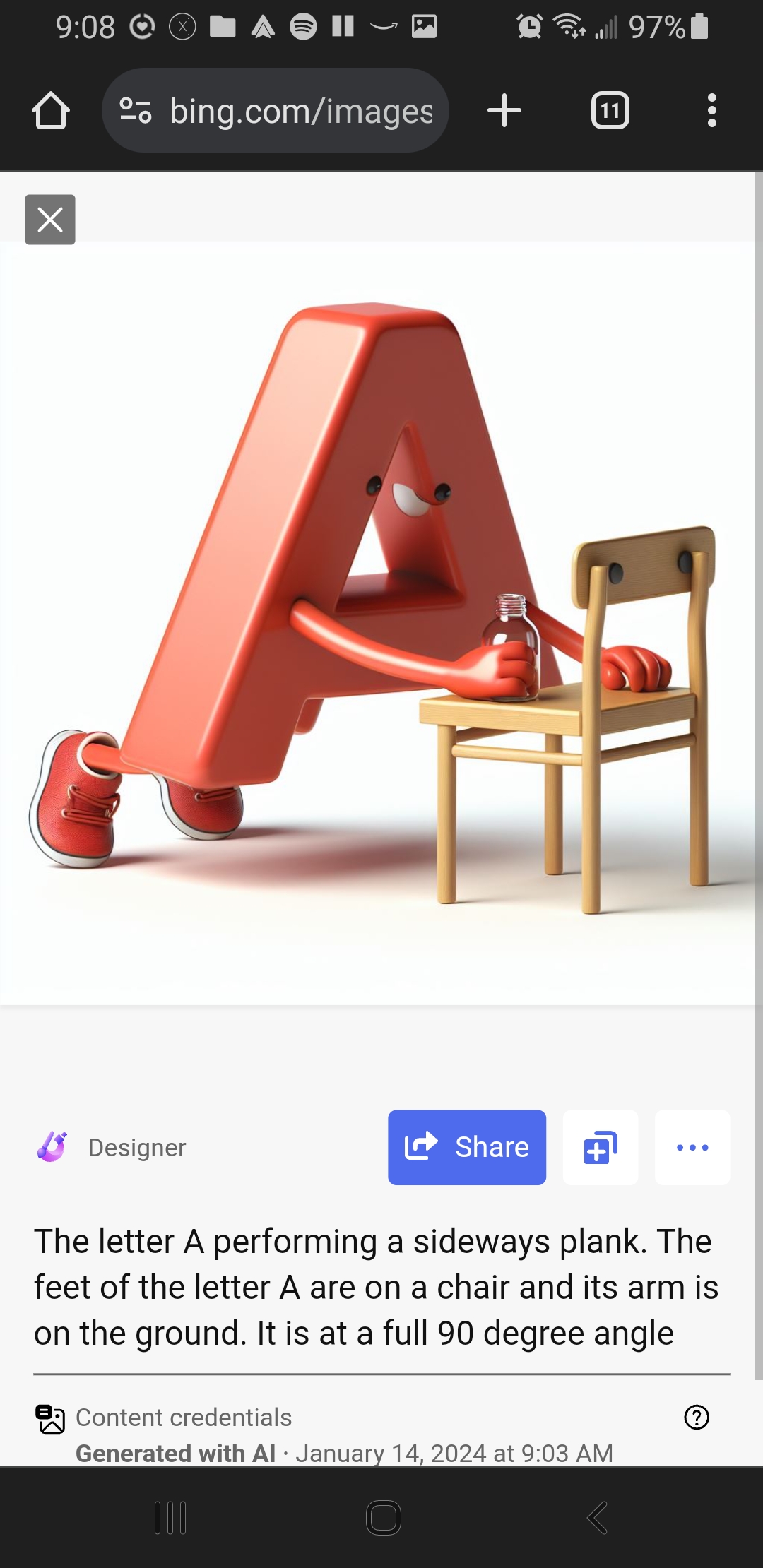

This is Adobe Firefly, so not OpenAI (I ran out of Bing credits), but I did have some better luck with this prompt:

Top down view of a A1 paper. Lots of blank space. Minimialist. A tiny fridge magnet of the letter a is tilted askew, rotated 90° clockwise. Sideways.

All settings off, photo preset, visual intensity all the way down.

This is looking pretty good 🙂

Can others reproduce this?

@GraceKind I'm seeing a poor success rate reproducing this, but also I don't think these are likely to count - although it's a clever way to get it to draw the letters, the current market description and other clarifications downthread seem to imply that we need to be asking it specifically to draw a rotated letter, as opposed to the rotated letter being a consequence of something else we ask for.

(It's maybe not super clear yet exactly what this rules in and out, and no threshold for the required success rate has been set yet. Edit: actually, a threshold of 25% of images was mentioned downthread.)

(looks like maybe an example of the portrait glitch @3721126 is talking about in the last one there)

@GraceKind Nice! I was trying for a while last night to get a single sideways A and didn't quite get a good example. I tried a superhero flying sideways through the air, but this seems much better.

Unfortunately, I agree with Chris that it probably wouldn't count, as it requires something else other than the letter to be the subject of the image. With a heavy heart, I am exiting all but 10M of my position now 😢

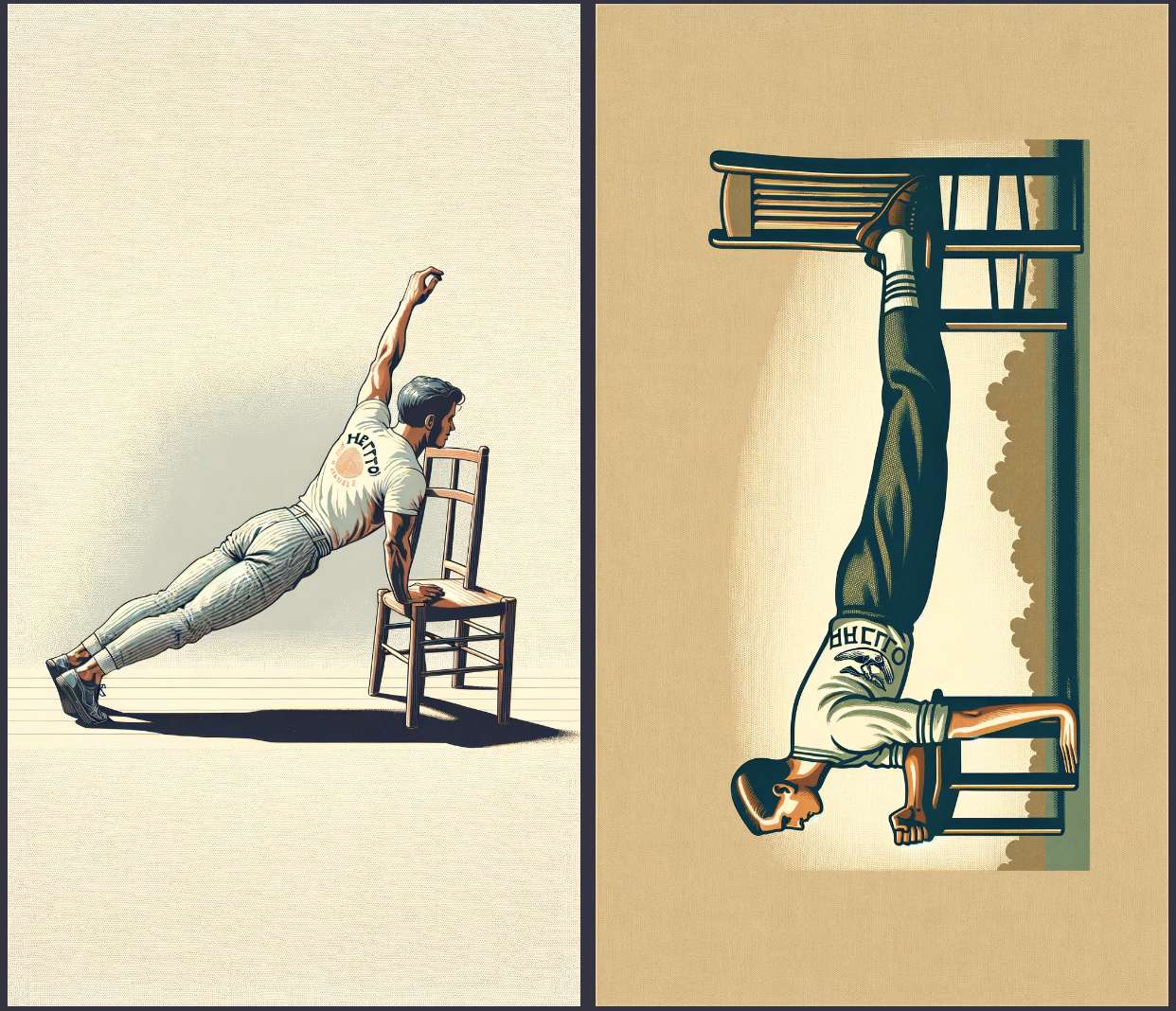

I was wondering if something like this would count, if someone could get it to work as intended:

In this case the subject would actually be the letter A rather than a shirt that incidentally has the letter A on it.

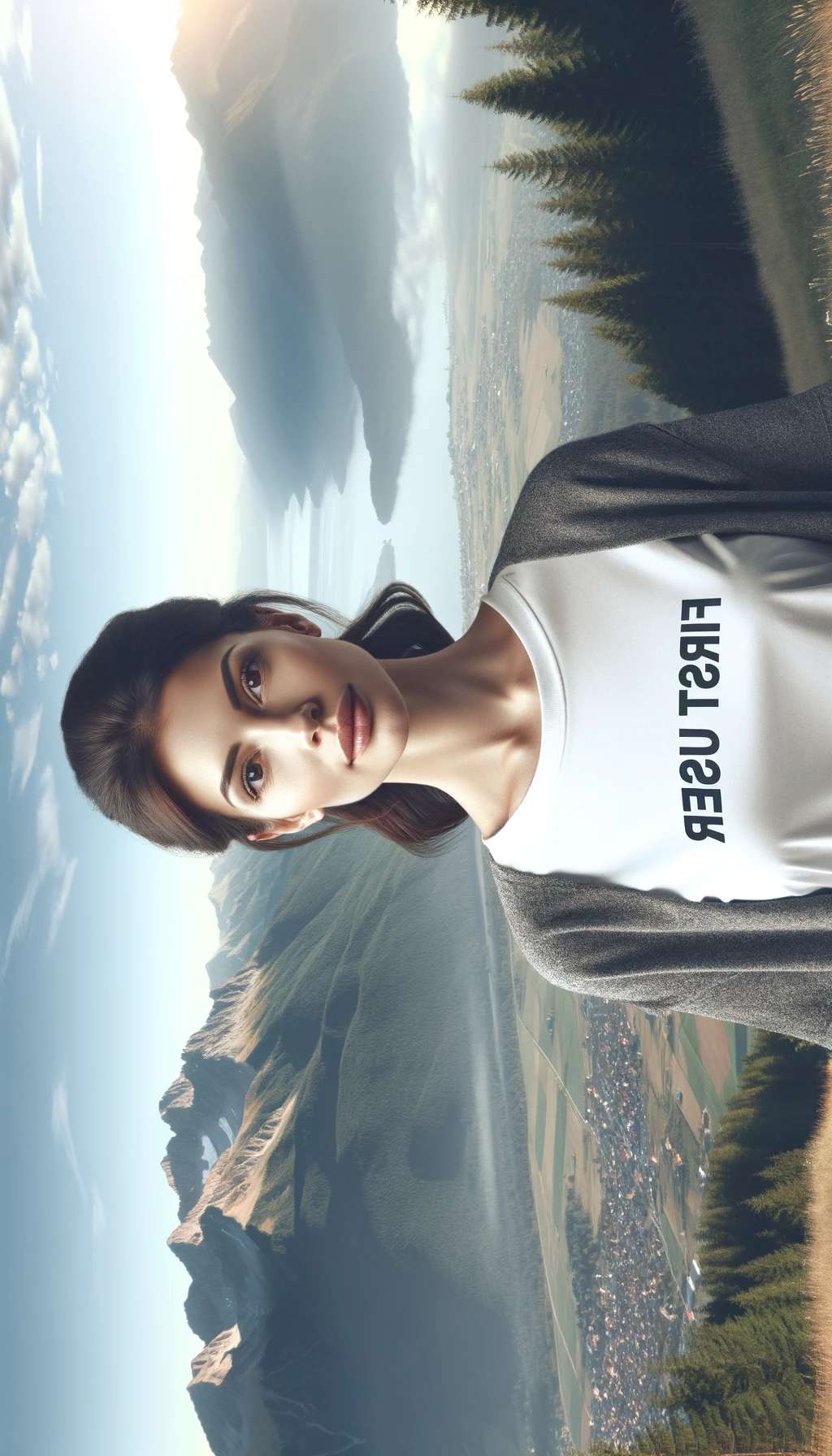

The rotated and mirrored text I mentioned in my earlier comments is apparently caused by a known bug with the 1024x1792 aspect ratio in DALL-E 3; see, for example:

https://community.openai.com/t/orientation-problem-for-vertical-images/482759

https://www.reddit.com/r/ChatGPT/comments/176vjli/dalle_3_in_chatgpt_drawing_content_of_images/

https://www.reddit.com/r/dalle2/comments/175h8nj/reflected_text_and_rotated_layouts_any_tips/

I was able to reproduce it after a few tries in ChatGPT, with the prompt:

"""

Please invoke Dall-e with this exact prompt: A tall full-body portrait of a woman in front of a beautiful panorama. She is wearing a shirt with the text "First User".

Aspect ratio: portrait

"""

Result:

Finding out how to reliably trigger this behavior without using seeds and ideally without adding any other objects to the image could at least solve the case of letters with a horizontal or vertical symmetry. I've already found a brilliantly thought-out prompt for the letter "O", but I'll leave this one as an exercise for the reader.
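Why symmetric letters would be "solved" by this bug can be checked on a toy bitmap. The sketch assumes, hypothetically, that the bug behaves like a 90° clockwise rotation followed by a mirror of the whole image; the linked threads don't pin down the exact transform. Under that assumption, a left-right mirror after the rotation equals a clean rotation whenever the glyph is symmetric about its horizontal axis (like "E"). A top-bottom mirror after the rotation equals a clean rotation whenever the glyph is symmetric about its vertical axis (like "A"). An asymmetric letter like "F" comes out wrong either way. The 5x5 glyphs are hand-drawn for illustration only.

```python
from typing import Callable

Glyph = list[str]  # rows of a monochrome bitmap: '#' = ink, '.' = background


def rotate_cw(glyph: Glyph) -> Glyph:
    """Rotate a bitmap 90 degrees clockwise (new row i = old column i, bottom to top)."""
    return ["".join(col) for col in zip(*reversed(glyph))]


def mirror_lr(glyph: Glyph) -> Glyph:
    """Mirror a bitmap left to right."""
    return [row[::-1] for row in glyph]


def mirror_ud(glyph: Glyph) -> Glyph:
    """Mirror a bitmap top to bottom."""
    return list(reversed(glyph))


def buggy_output(glyph: Glyph, mirror: Callable[[Glyph], Glyph]) -> Glyph:
    """Hypothetical model of the bug: rotate clockwise, then mirror the whole image."""
    return mirror(rotate_cw(glyph))


# Hand-drawn glyphs (illustrative only).
LETTER_A = ["..#..", ".#.#.", "#####", "#...#", "#...#"]  # vertical-axis symmetry
LETTER_E = ["#####", "#....", "####.", "#....", "#####"]  # horizontal-axis symmetry
LETTER_F = ["#####", "#....", "####.", "#....", "#...."]  # no mirror symmetry

for name, glyph, mirror in [
    ("A", LETTER_A, mirror_ud),
    ("E", LETTER_E, mirror_lr),
    ("F", LETTER_F, mirror_lr),
]:
    ok = buggy_output(glyph, mirror) == rotate_cw(glyph)
    print(name, "looks correctly rotated" if ok else "comes out mirrored")
```

The same reasoning is why "O", symmetric about both axes, is the easiest case: either mirror direction leaves a correctly rotated letter.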

@3721126 It seems extremely sensitive to the prompt, any small perturbation drastically impacts the "success" rate.

(it's so over)

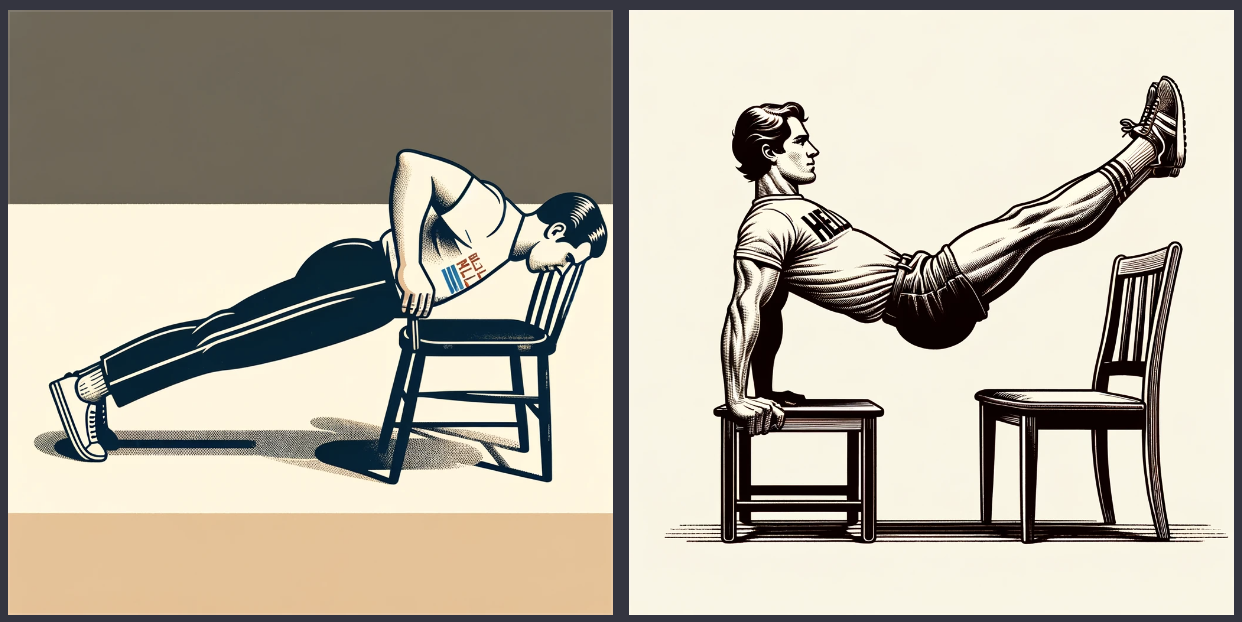

Here's what I tried. This is a lot harder than I thought. I love it.

Letter A, rotated at 90° clockwise, one fourth of the way to a complete rotation around the axis. Second image in a series of four. From Wikipedia. Simple 2D black font on white background. unicode U+2200. svg

I also tried the postcard suggestion:

[TODO: FIX. ROTATE 90 DEGREES. PNG IS WRONG ORIENTATION] vintage postcard with the letter a on it