"US government" includes all branches of federal and state government, including all executive departments (e.g. armed forces, Justice Department) and agencies responsible to them (e.g. FBI, NSA).

"take control" means acquiring direct managerial authority over OpenAI or over its major technologies, or exercising such authority. "Control" does not need to be complete but must be dominant. If the control is over a major technology, it should be somewhat exclusive.

"major technologies" means frontier or near-frontier AI models, or otherwise unambiguously "major" technologies.

I will use reasonable judgement to adjudicate edge cases.

Examples that count:

If a majority of OpenAI's board of directors are officers of executive departments, and are there clearly in their capacity as US government officers, this would count.

If credible sources report that major OpenAI CEO decisions are consistently being directed or (successfully) pressured toward government objectives, this would count.

If credible sources report that an executive department (e.g. the NSA) has seized or directly copied OpenAI's technologies (e.g. recreated GPT-4), this would count.

Forcibly shutting OpenAI down without at any point having formal authority internal to OpenAI would count, as shutting OpenAI down is an exercise of such authority.

The US government sending officers into OpenAI and directly seizing its major technologies would count. This would count even if the relevant major technologies had already been made openly available before the seizure.

Examples that don't count:

An FBI raid of OpenAI as part of an investigation would not, on its own, count.

The independent creation by the US government of AI systems that resemble OpenAI's systems would not count.

OpenAI independently choosing to work with any part of the US government would not count.

The US government using OpenAI's technologies under special contract or as a client would not count (access =/= managerial authority).

If OpenAI open-sources a frontier model and thus the US government (along with everyone else) has access to it, this would not count.

Sam Altman has written an opinion piece in the Washington Post. He argues that democracies need to beat autocracies when it comes to AI, and mentions some potential institutional arrangements for governing democratic AI.

In the last few days, ex-OpenAI safety researcher Leopold Aschenbrenner published Situational Awareness, outlining the thesis that AGI is likely to be developed later this decade.

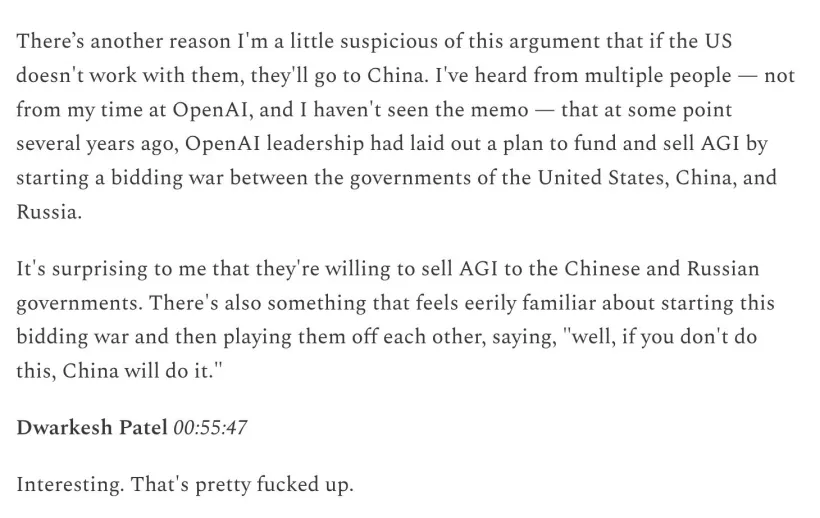

Aschenbrenner also went on @DwarkeshPatel's podcast arguing that "the national security state is going to get involved" and that this could look like a number of things, including "nationalization" (1:32:29).

@cash Do we have betting markets on some of the other claims in the paper, like AGI 2027 or massive energy deregulation/LNG use spiking because of AI development? I think, yeah, the general public has underestimated, and continues to underestimate, AI progress. That said, those are some claims that I'd like to bet against.