Inspired by all the people trying to back up their claims of AI risk by saying Turing agreed with them, such as here and here.

"Non-negligible" means that Turing would have supported at least a few thousand person-hours by academics and computer scientists looking into the issue further.

"Exterminate or enslave" will include any serious X-risk or S-risk. Anything that would make humanity much worse off than it is now.

Resolves at some distant point in the future when these issues are less emotionally charged and people are less likely to engage in tribalism and motivated reasoning, and we hopefully have learned more about Turing's life and beliefs.

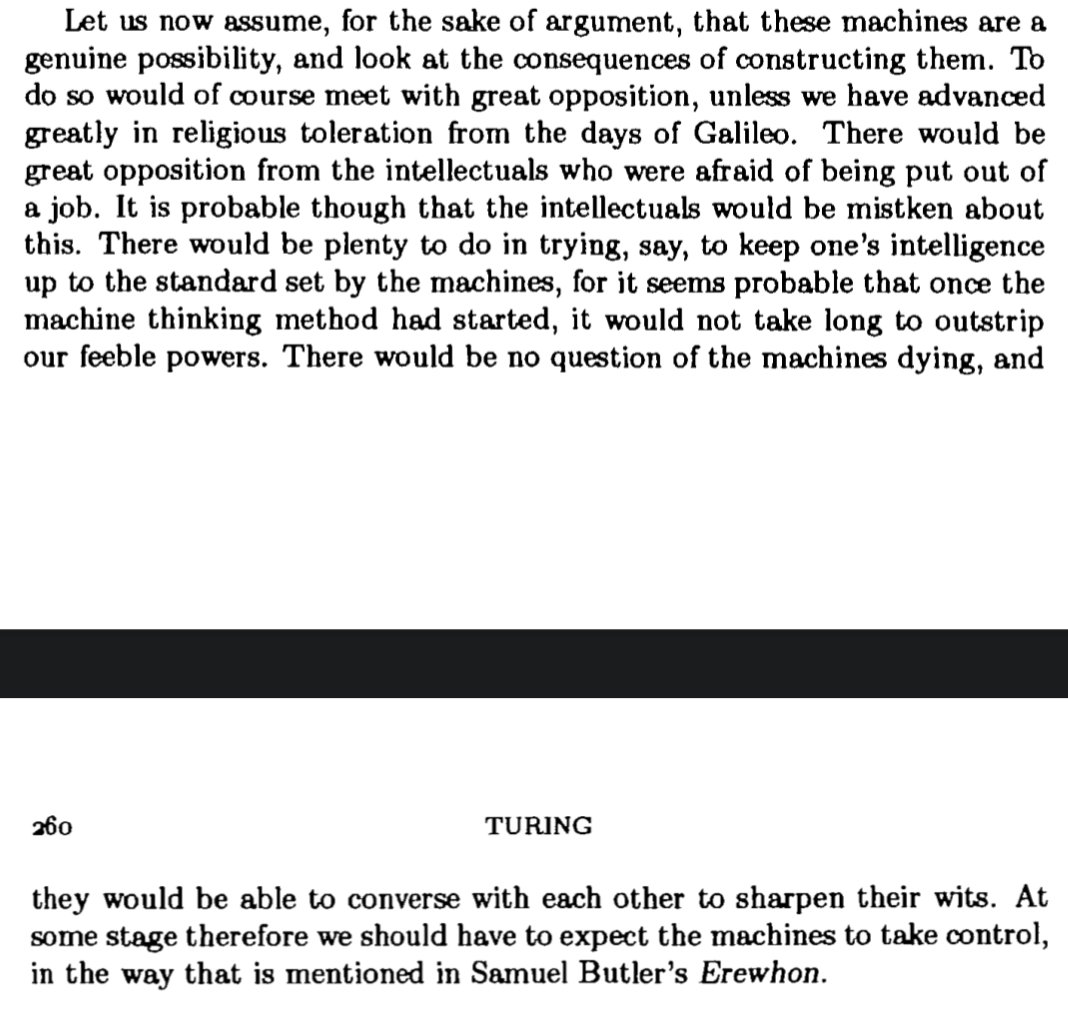

The main two passages people quote:

@IsaacKing Work on mechanistic interpretability, which is arguably motivated by x-risk (especially if you consider where the funding comes from, e.g. Redwood Research), has been accepted at major conferences. So I guess at least the reviewers for those conferences did not think that, say, Chris Olah's time would have been better spent playing the didgeridoo or something. However, NeurIPS reviewers are not necessarily x-risk critics.

> "Non-negligible" means that Turing would have supported at least a few thousand person-hours by academics and computer scientists looking into the issue further.

Would have supported people in his time doing that, or people in our time doing that?

Would have supported if asked, or would have supported after deliberation/argument?

Neither of those passages seems to support the conclusion at all. The closest is when he mentions machines taking control, but that doesn't necessarily mean enslavement. More likely it's just, "humans put machines in control because they run things better than we do."

'Could' and 'would' are two very different things; IMO his tone implies a possibility, but not a strong probability.

That said, I have less faith than you do that there will come a point in the future when 'people are less likely to engage in tribalism and motivated reasoning', so it may be a while before we can resolve the market 🤣

@PipFoweraker About this issue in particular, I mean: once "Is AI risk a pseudoscientific cult that we can dismiss and belittle, or do we have to actually engage with their arguments?" is no longer a pertinent question.