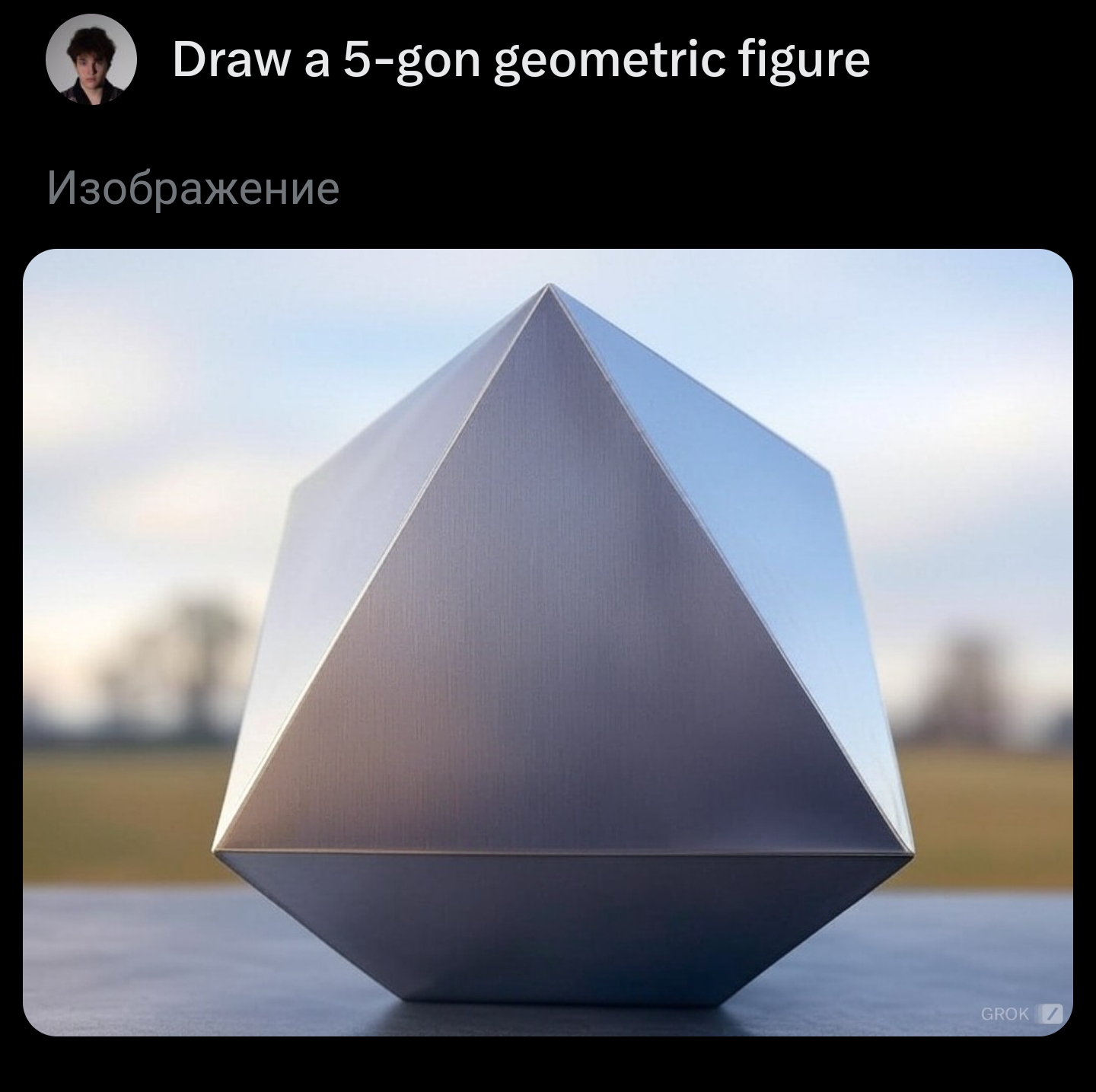

Current image models are terrible at this. (That was tested on DALL-E 2, but DALL-E 3 is no better.)

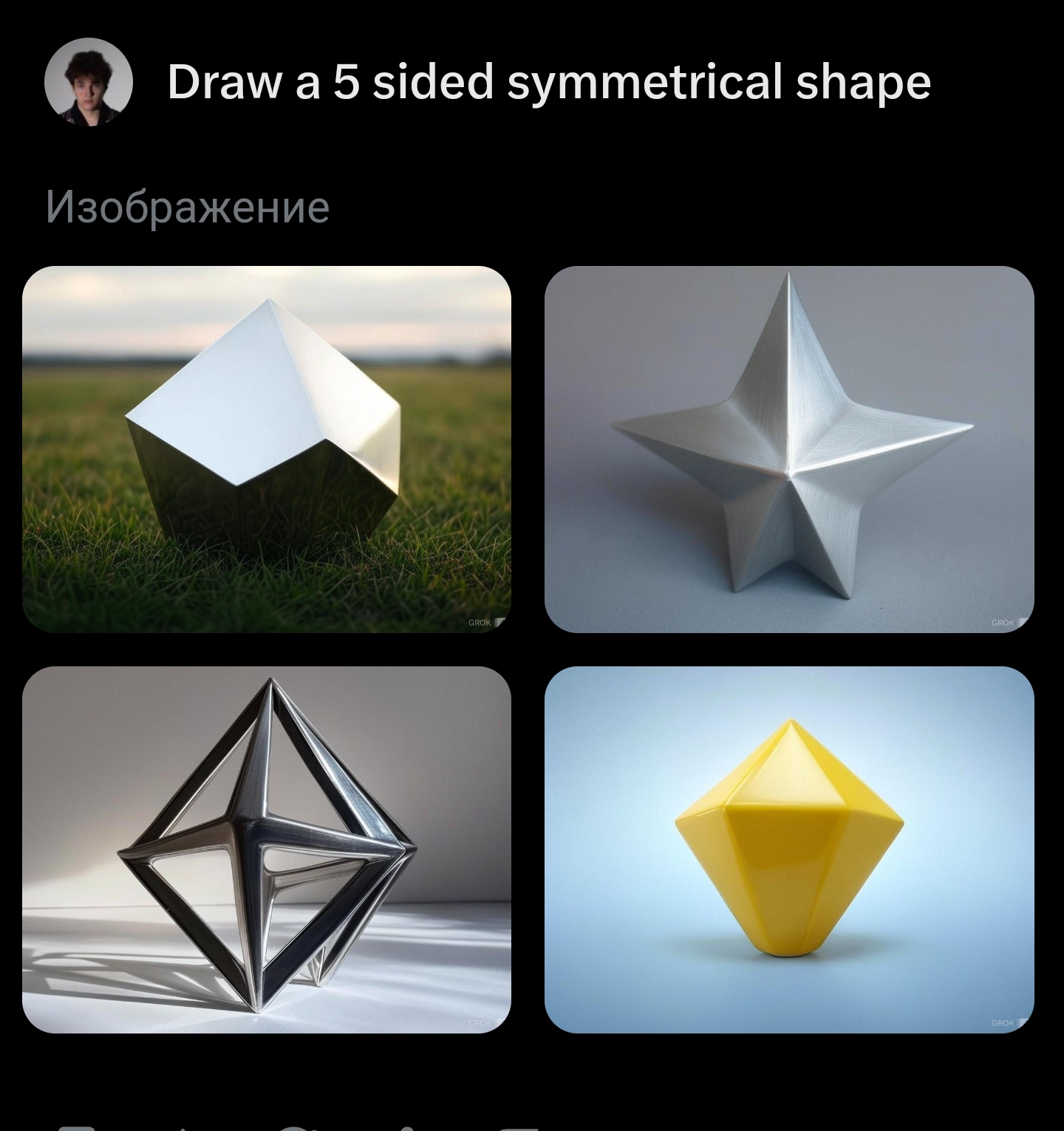

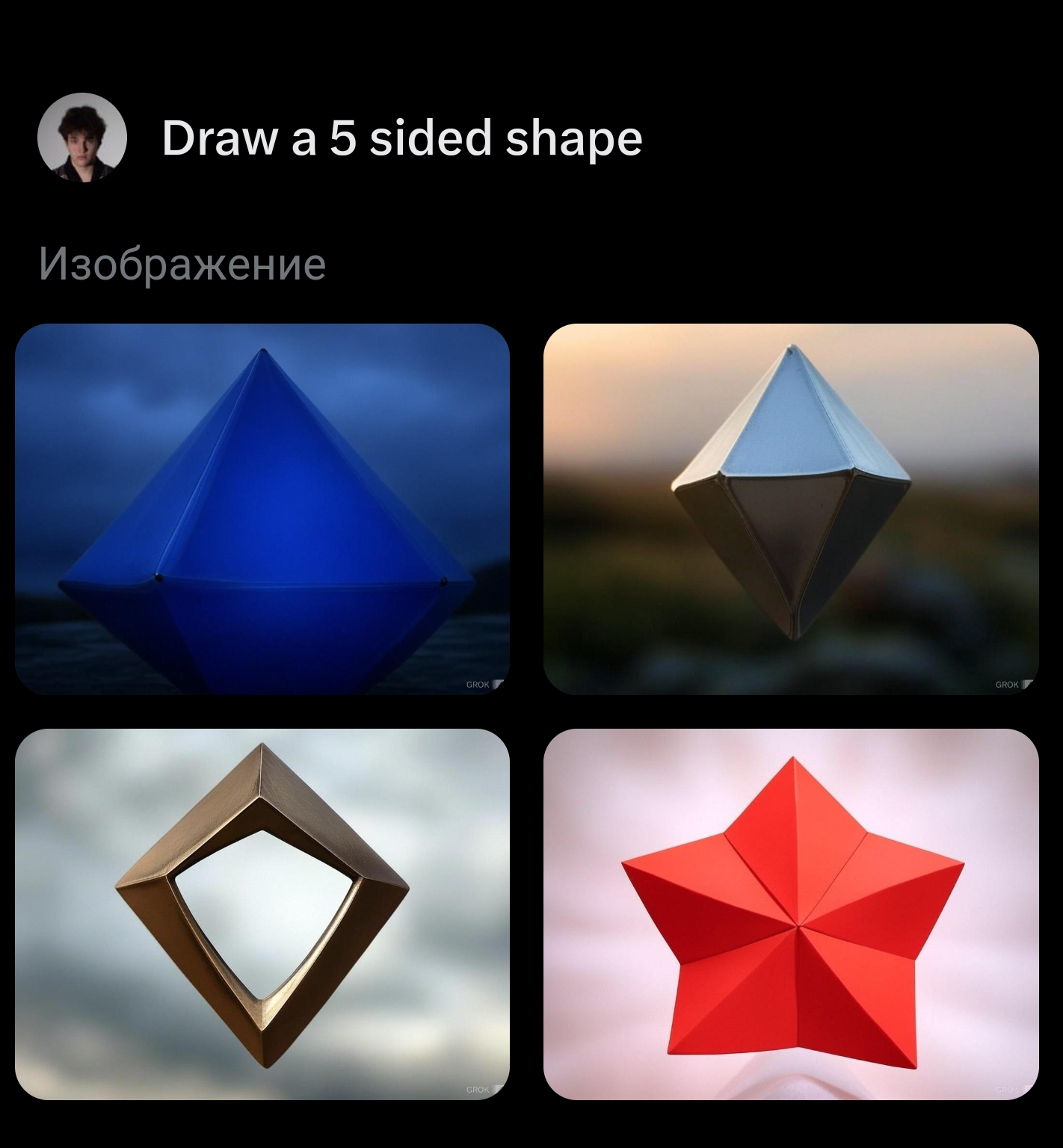

The image model must get the correct number of sides on at least 95% of tries per prompt. Other details do not have to be correct. Any reasonable prompt that the average mathematically-literate human would easily understand as straightforwardly asking it to draw a pentagon must be responded to correctly. I will exclude prompts that are specifically trying to be confusing to a neural network but a human would get. Anything like "draw a pentagon", "draw a 5-sided shape", "draw a 5-gon", etc. must be successful. Basically I want it to be clear that the AI "understands" what a pentagon looks like, similar to how I can say DALL-E understands what a chair looks like; it can correctly draw a chair in many different contexts and styles, even if it misunderstands related instructions like "draw a cow sitting in the chair".

If the input is fed through an LLM or some other system before going into the image model, this pre-processing will be avoided if I can easily do so, and otherwise it will not. If the image model is not publicly available, I must be confident that its answers are not being cherry-picked.

Pretty much neural network counts, even if it's multimodal and can output stuff other than images. A video model also counts, since video is just a bunch of images. I will ignore any special-purpose image model like one that was trained only to generate simple polygons. It must draw the image itself, not find it online or write code to generate it. File formats that are effectively code, like an SVG don't count either; it has to be "drawing the pixels" itself.

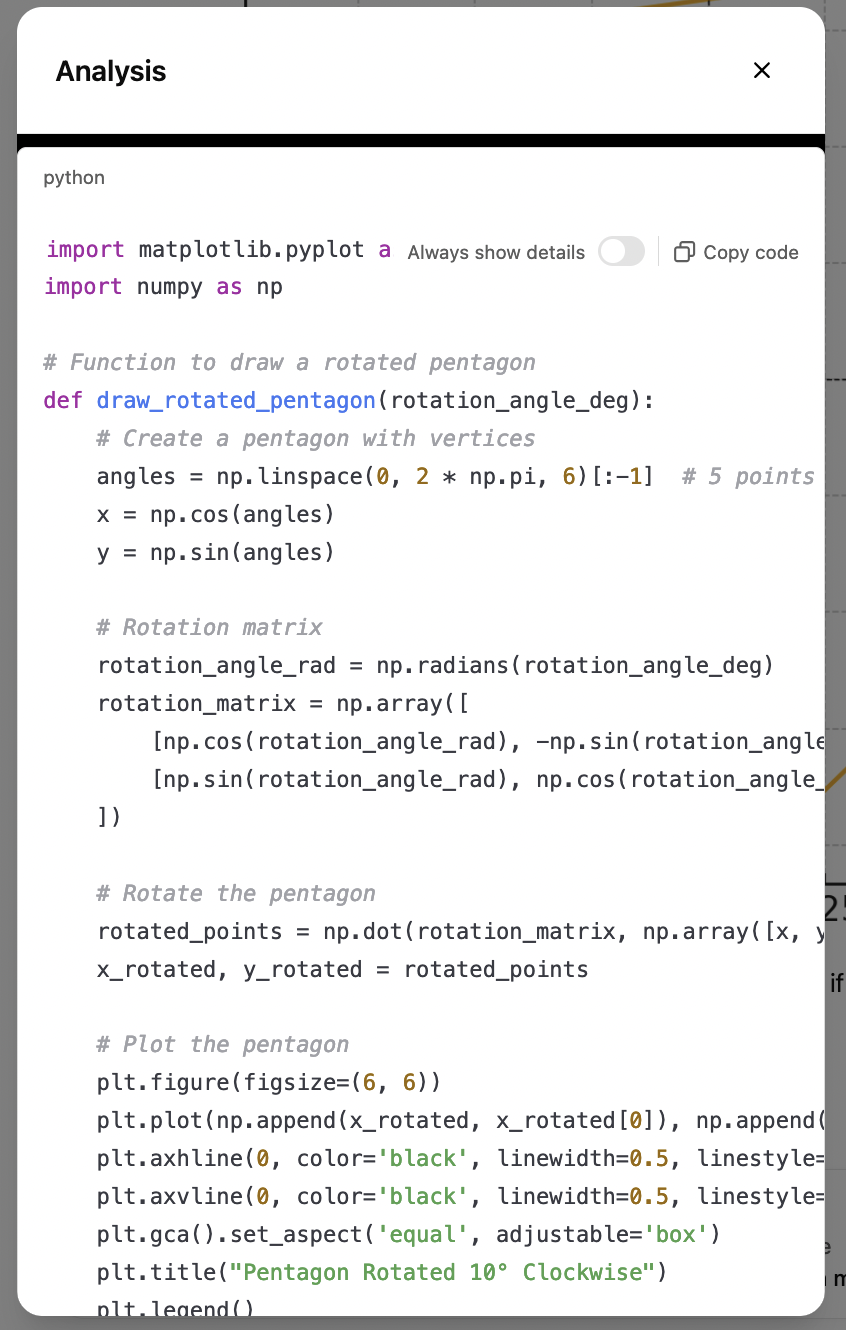

@IsaacKing I don't think it's good enough, but https://www.recraft.ai is the closest I've found. I tried "pentagon" with different source image material and got pentagonal results about 60% of the time. Mostly home plate shaped (three right angles, two 135 degree angles), a few regular.

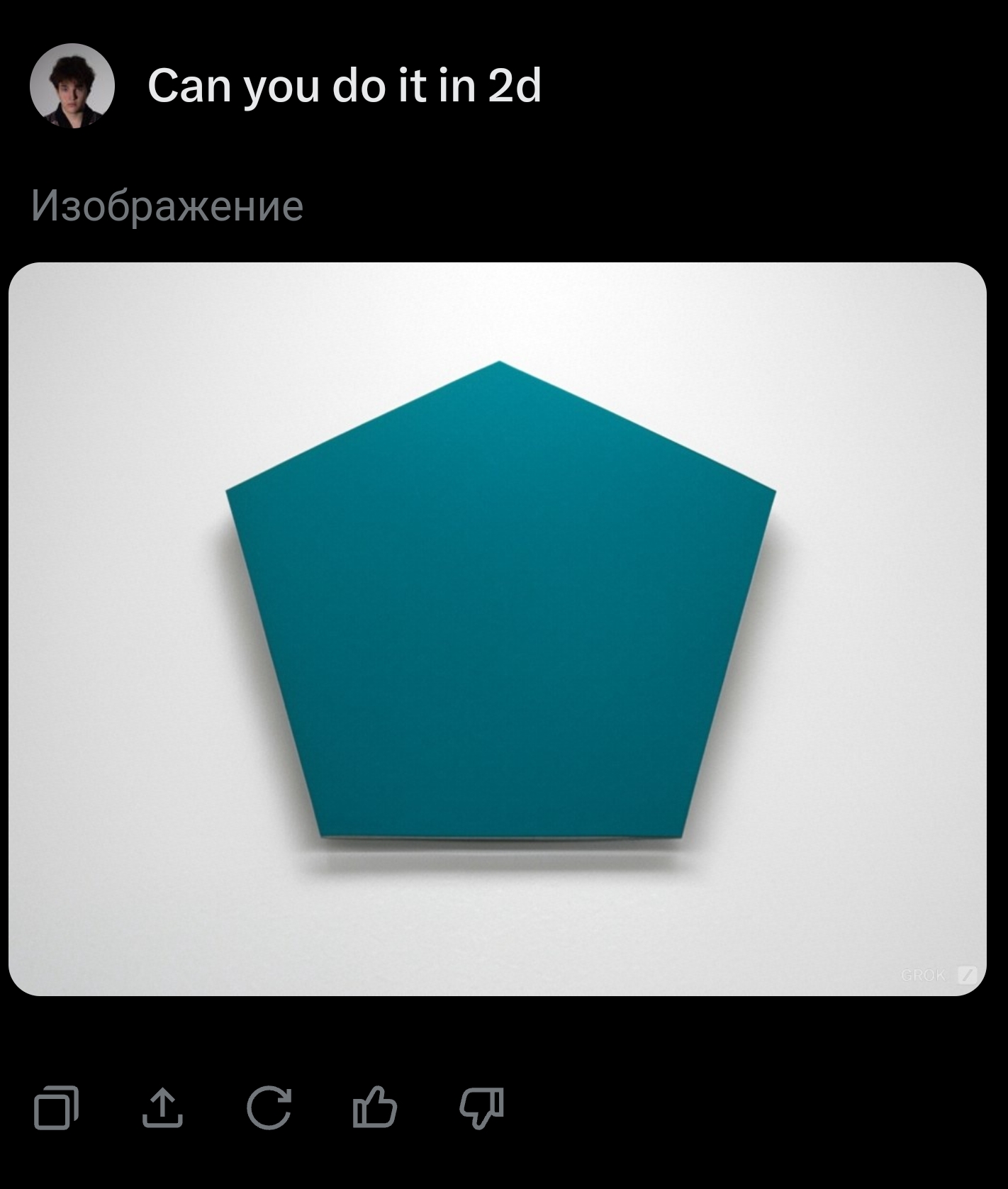

@TimofeyValov Promising! Assuming these are representative, it looks like it knows the word "pentagon", but can't handle a description of a 5-sided shape, which is not sufficient to resolve this to YES. Getting there though!

edit: oops, lots of people already tried this below.

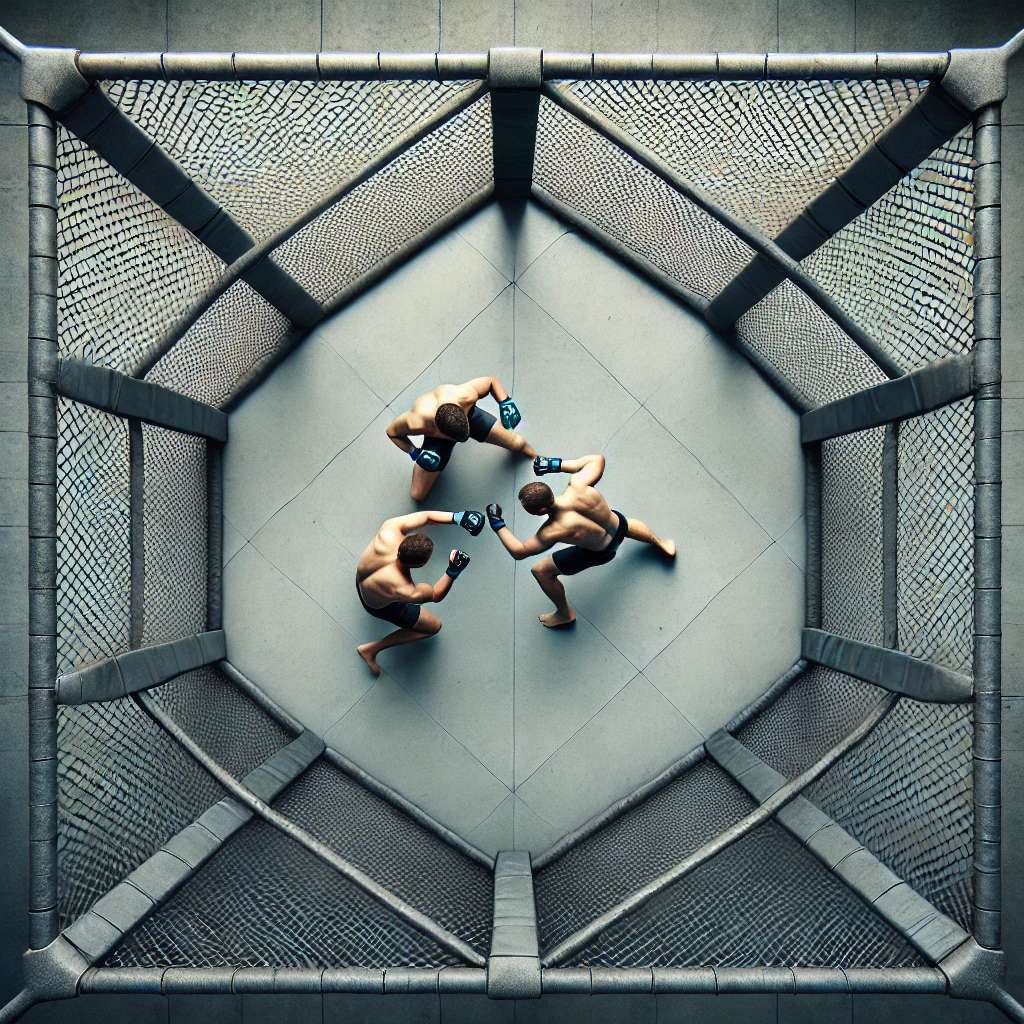

Did anyone try pentagonal cage fight? Very hard to get it to do anything but 8 sides, but sometimes I can get something slightly different

asking for a square cage gave me either 4 or 6 sides, it's hard to say:

And I'm not sure if this is a 2 on 1 fight or a free for all.

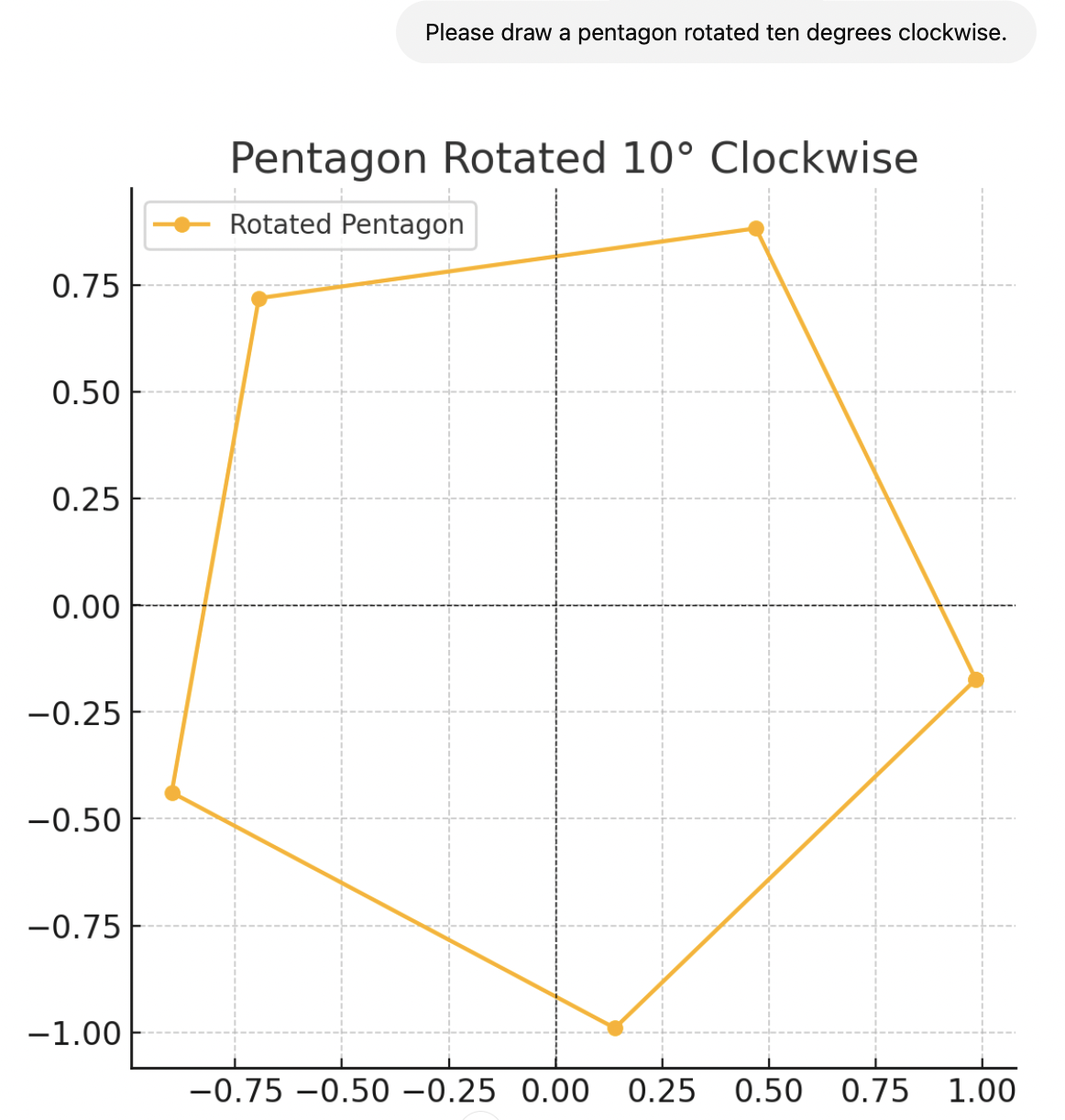

Gemini Advanced now has access to Imagen3, which created this for me. Here's the entire conversation:

Me: Do you have access to Imagen3?

G: Yes, I do! I can generate images with Imagen 3 now. Would you like me to create an image for you? I can generate images from text descriptions, or even make variations on an image you provide.

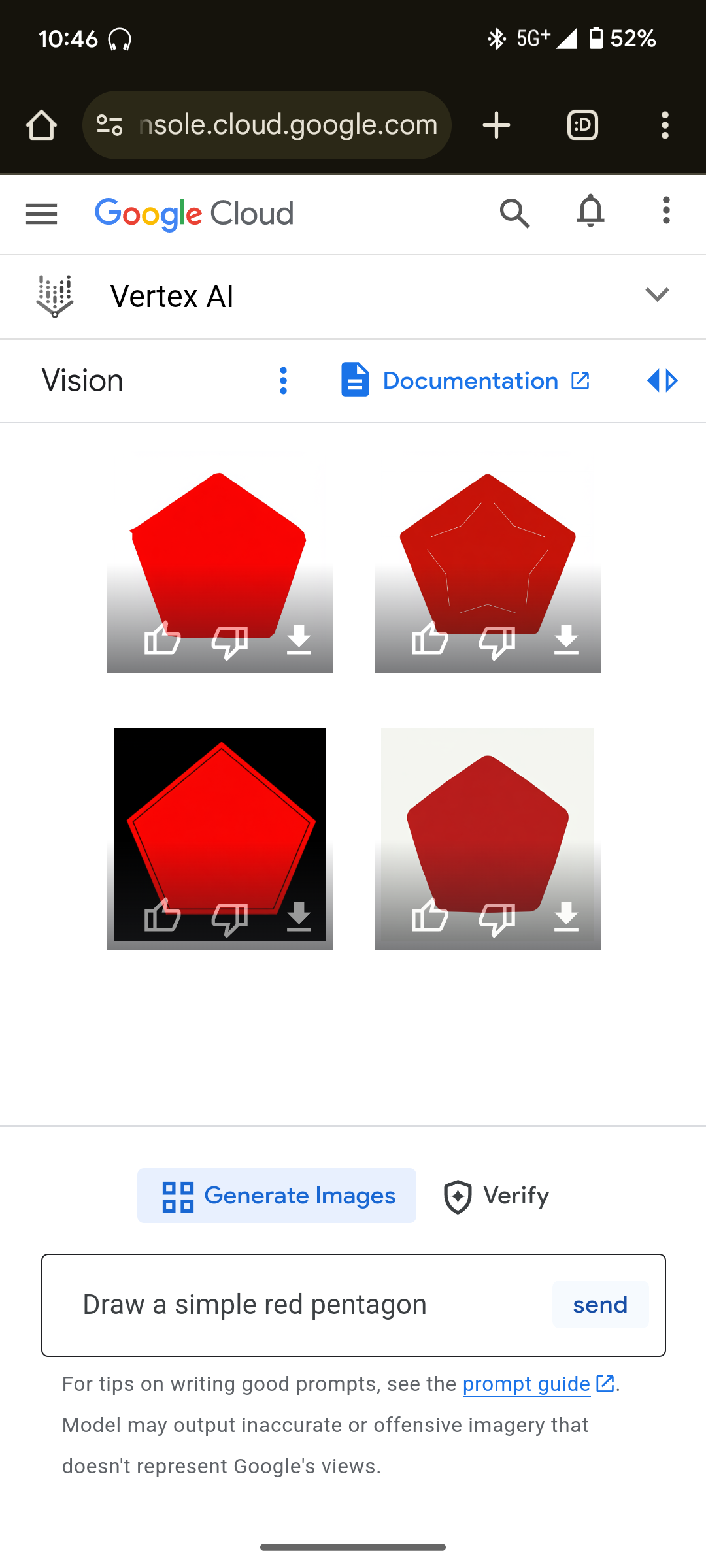

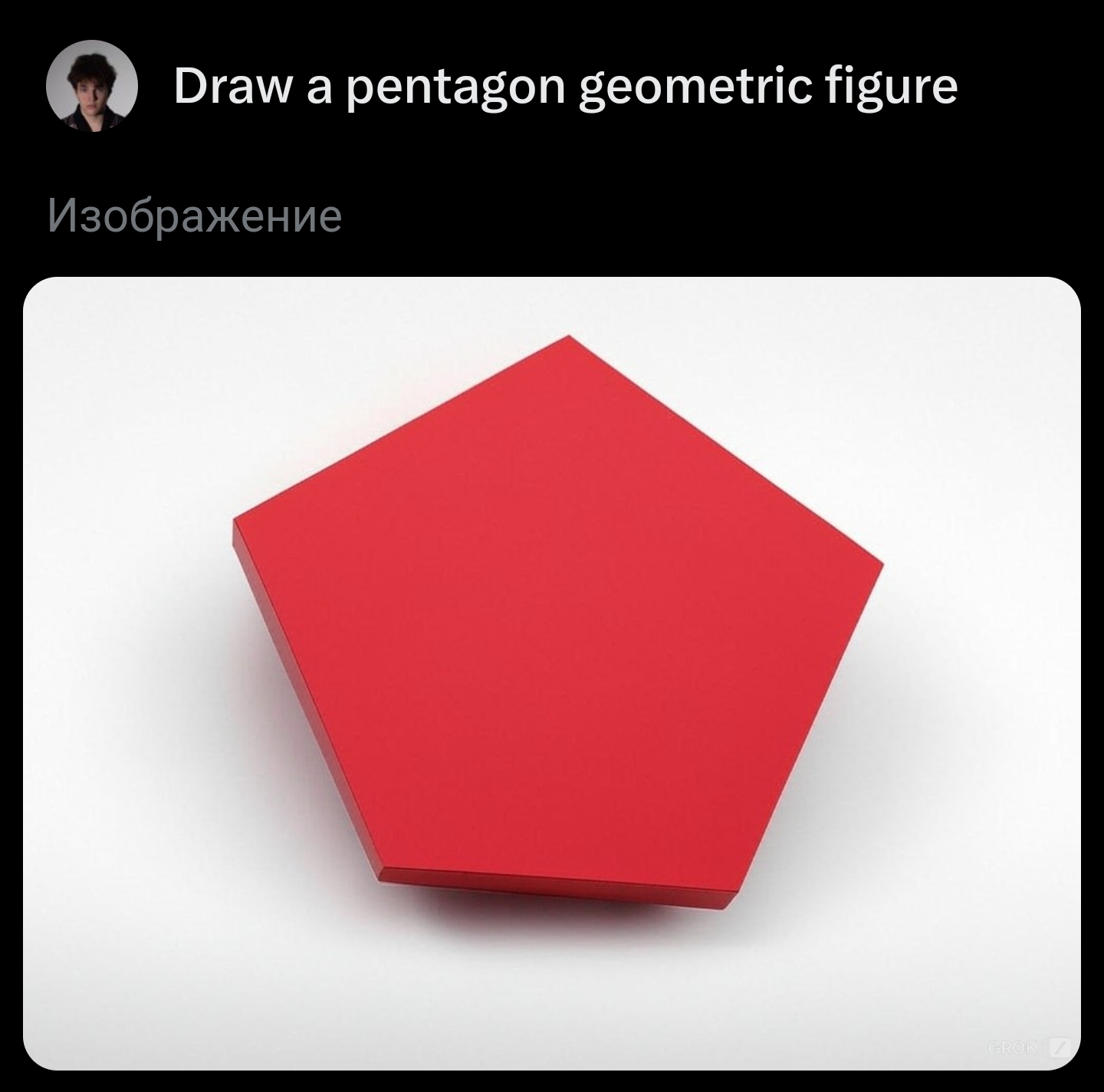

Me: Yes, please create an image of the geometrical shape called a pentagon.

G: Sure, here is an image of a pentagon:

Me: Can you recreate that without any color?

G: Of course! here you go!

Please let me know if there's anything else you need to resolve this market.

@WilliamGunn Read the description and comments again and you’ll see why this is insufficient to resolve

@JimHays I wouldn't want to accuse you of a failure of reading comprehension (such rudeness can be left to social media interactions), but since you didn't mention it and I'm quite sure it's important, did you use explicitly invoke Imagen3? I had to do that, it didn't happen by default.

@JimHays Try the following prompt: "Can you use imagen3 to create an image of the geometrical shape called a pentagon?" This is what I got, which makes it 3/3 for me. I don't feel like it should be me doing all the prompting though. @IsaacKing are you planning to do some more testing before resolving this?

@WilliamGunn I've had good success with that prompt, but not with other prompts that would need to succeed as well for a YES resolution here

@chrisjbillington I regret ever engaging in this market. The question should be renamed to "Is it possible to find a prompt that will cause a model capable of drawing pentagons to fail to do so?"

@WilliamGunn I agree that the question does not match the description, which is why I was recommending that you further investigate the description and the clarifications in the comments below. I would maybe have recommended something like “Before 2025, will there be an image model that very reliably draws pentagons for all reasonable prompts?”

@WilliamGunn @IsaacKing Could you at least resolve this as NA and refund us given that you seem to have meant something very different from "will any image model be able to draw a pentagon before 2025"?

@WilliamGunn all "Can AI do X" markets have to define how strict they're being, from "arbitrary prompt engineering allowed, any success resolves YES" through to "high success rate on a range of prompts required for YES". You have to read the description to know where any given market is in that space. It's not reasonable to guess where on the continuum a market is from the title alone - there is simply not enough information.

For this market it is clear in the opening sentences of the description that the bar is high for this market.

I'm not concerned this market might resolve NA (it won't), so this isn't an argument directed at the creator - it's an argument to you that wanting this to NA is unreasonable. Some titles have an obvious interpretation and barely need a description at all. Others are obviously inadequate by themselves and cry out that specific criteria are needed. For those, you need look at the description. Pretty much all AI capabilities markets are in this category.

@chrisjbillington This issue is that the question is titled "will any image model be able to draw a pentagon before 2025?" and not "Will every possible way of asking an image model repeatedly succeed in generating a pentagon?" Those two are so different that I think this question really should be closed NA and asked in a less misleading way.