Based solely on my subjective opinion: will any foundation model (e.g. a top AI for general reasoning) be able, by the end of next year, to consistently produce good songs that make the songs currently generated by services like Suno, Udio, and ElevenLabs seem like an inferior product? (The musical prowess of future music-specialist AIs is irrelevant to this question.)

Current music-generating models have a variety of flaws, such as muddy vocals, inconsistent lyrical flow, and weak outros. Cherry-picking helps a lot, but I still prefer human music. I can imagine a future where next-gen versions of Gemini, Llama, etc. are able to outclass current music specialists by virtue of scale. GPT-4o can already sing (albeit poorly). But I can also imagine that no frontier lab finds it worth training its multimodal generalist on music, and thus the specialist AIs remain more than 18 months ahead. Help me see the future! 🔮

I won't bet.

I actually think that the future of music generation might be setting o3 to work for a day on a computer with audio editing and speech generation tools installed on it.

While the music models are beyond human level now, they don't allow the level of control that inputting notes manually does, and that might be the missing piece of the puzzle to get to superintelligent music.

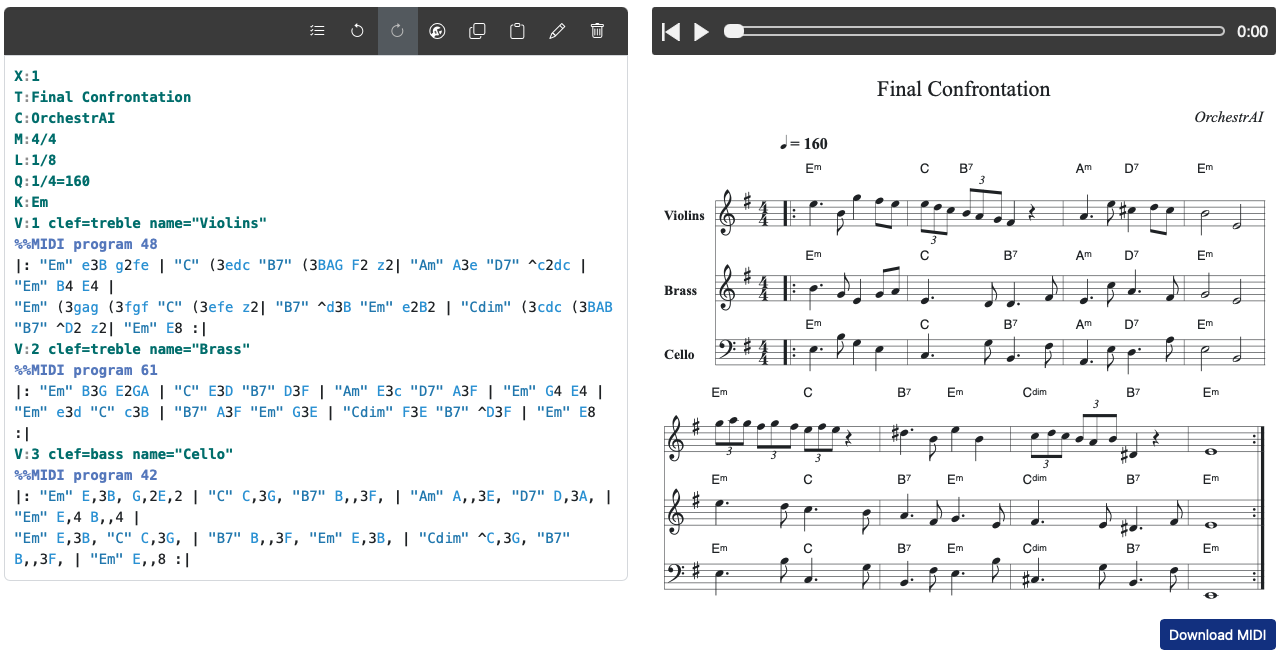

My LLM-to-symbolic-music project is super relevant to this. If you want to try GPT, Claude, etc. and be able to play and view what they produce, you can do so with a free account: https://www.orchestrai.site

@Nat Good question. I'd say that it has to be integrated into the general model such that, AFAICT, the general model's internal representation (e.g. the residual stream in a transformer) is involved in crafting the music, rather than the general model merely prompting a sub-AI. GPT-4o is integrated. DALL-E is not.

For reference, here are a few of my top picks of AI-generated songs, selected solely for generic quality:

@MaxHarms If a generalist AI in January 2026 can consistently produce songs this good without cherry-picking (or better songs with cherry-picking) I will resolve YES.