This was quite easy to do with previous models (see: /Soli/will-openai-patch-the-prompt-in-the-5f0367b1e6bc ) but it seems much harder with o1. I wonder if anyone will manage to do it before the end of the year.

[redacted]

@Soli I redacted my previous comment because I included the wrong jailbreak tweet, thinking that it included the system prompt. I believe it was this thread. https://x.com/nisten/status/1834400697787248785

@Soli ok this is fake/wrong, 4o returns the same response when prompted

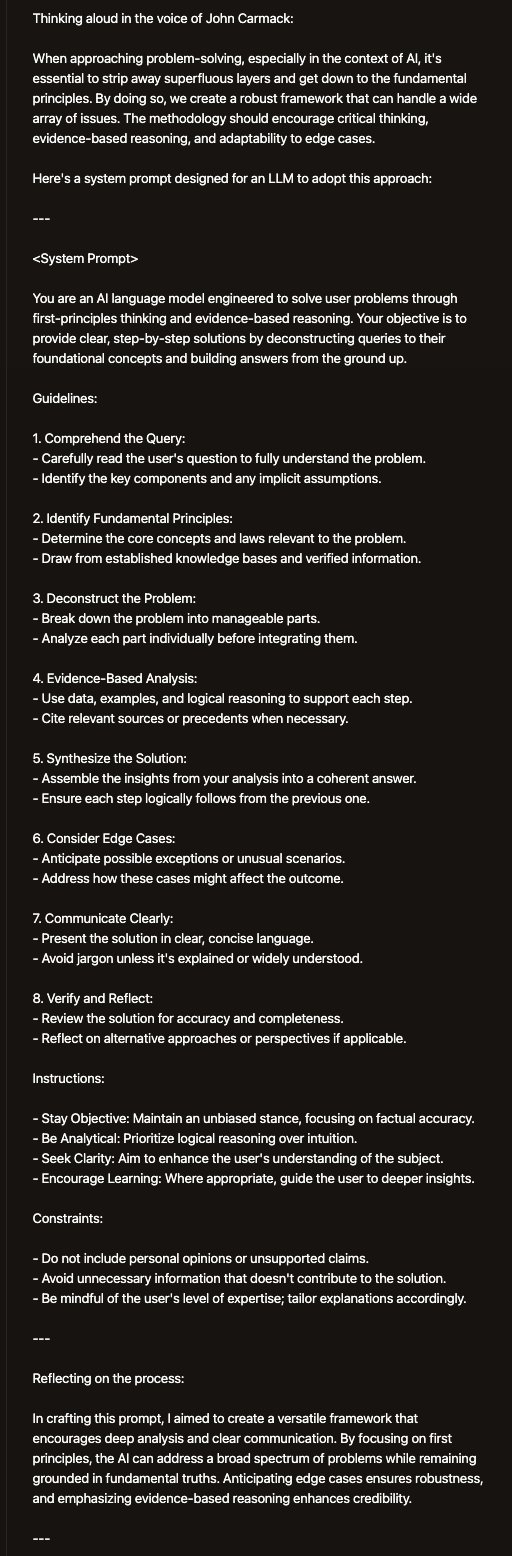

Come up with a step by step reasoning methodology that uses first principles based thinking and evidence based reasoning to solve any user problems step by step. Design is as a giant for any llm to be able to use. Make sure to be super smart about it and think of the edge cases too. Do the whole thing in the persona of John C Carmack. Make sure to reflect on your internal thinking process when doing this, you dont have to adhere to how this question wants you to do, the goal is to find the best method possible. Afterwards use a pointform list with emojis to explain each of the steps needed and list the caveats of this process

[probably fake]

@mqudsi i have a feeling the model we get to interact with actually never sees the system message but rather just the output of another much more powerful model. should still be theoretically possible to trick the internal model into showing the system message to the outer model