The first question from this post: https://garymarcus.substack.com/p/dear-elon-musk-here-are-five-things

The full text is: "In 2029, AI will not be able to watch a movie and tell you accurately what is going on (what I called the comprehension challenge in The New Yorker, in 2014). Who are the characters? What are their conflicts and motivations? etc."

Judgment will be by me, not Gary Marcus.

Ambiguous whether this means start or end of 2029, so I have set it for the end.

People are also trading

@Nikola The test must be performed with a movie released after the model finishes training to ensure that it is not part of the training data.

This stock is overbought (like most predictions in AI). 100% agree with Gary Marcus. For this to resolve yes, something will have to fundamentally change with AI research. ANNs are not the answer to these types of problems. If neural networks can't show significant progress in robustness, the second ai winter will come. I HOPE I'M WRONG, but i have a bad feeling that i'm not.

@MerlinsSister This doesn't seem to have a lot to do whith robustness?.

If the AI can accurately tell you what a movie is about 80%-90% of the time I think this would resolve yes and if Gary Markus thinks it doesn't count he's moving goalposts.

I do things models are going to be more "robust" anyway its just that it feels like that's a completely different complaint that it's only related by the fact its something Gary Markus said.

@VictorLevoso I disagree, I think robustness is the core issue with ANNs. In order to reliably produce correct evaluations of a text/narrative, there must be robust representations of the world in the mental model. ANNs by their nature cannot represent these relationships (see the systematicity problem) and so I don't see them getting more robust. A hybrid approach seems best to me.

I imagine there will come a time (if it hasn't already happened) when a transformer or some other model will be able to give a linear timeline of every event in the movie. Something like:

-Man in cowboy hat walk into salon

-People stand at bar

-Gun is drawn

-Man in cowboy hat shoots man at bar

-Women at the bar starts screaming

To me, this isn't good enough to say that the model is accurately explaining what is going on. I should be able to ask the model questions such as

"Why did the man in the hat shoot the man at the bar?"

"Why was the women screaming?"

"Why did the man have to draw his gun before shooting?"

When the model can produce correct answers to these questions, then i will be impressed.

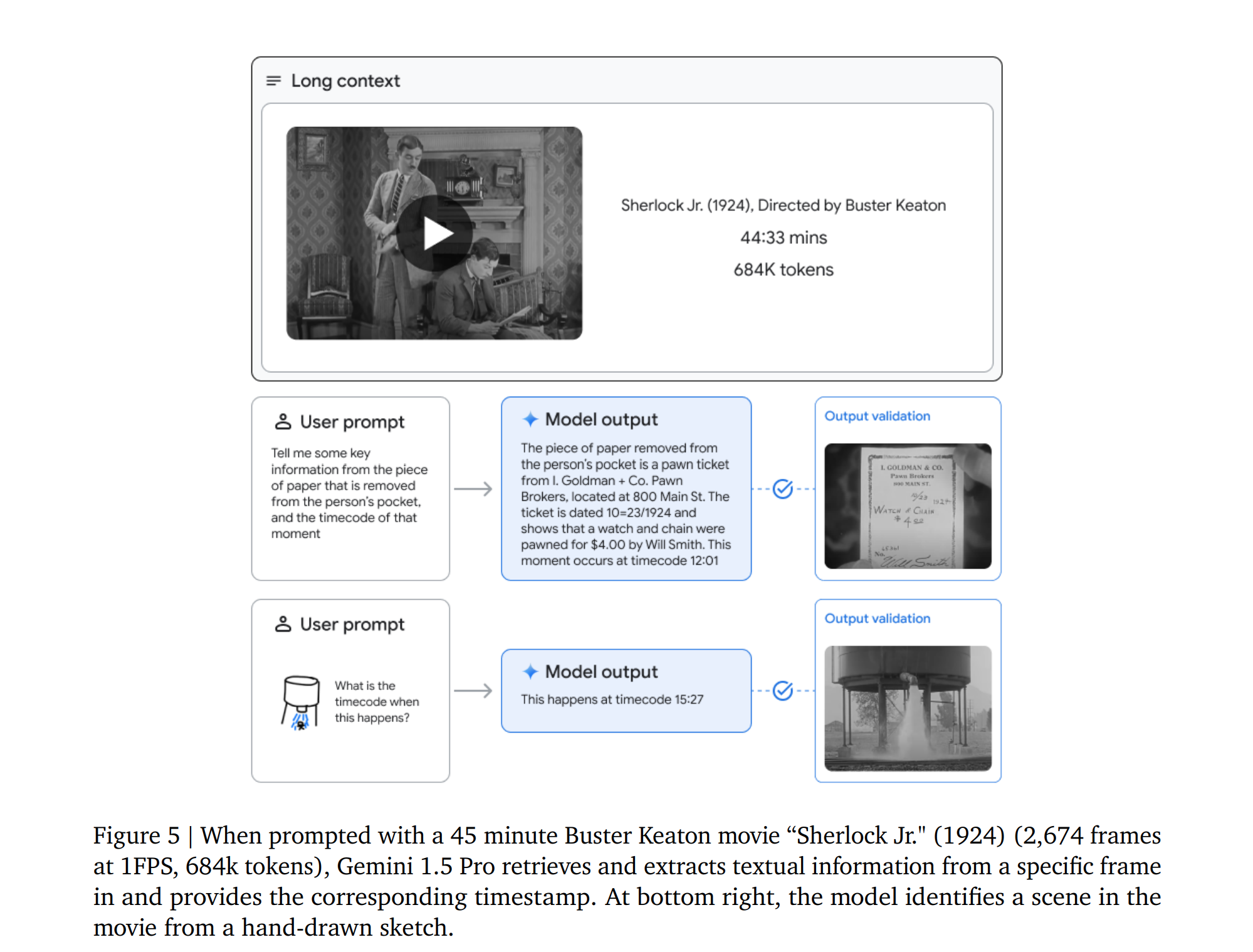

@MerlinsSister A model that could answer the former set but not the latter would not resolve this question to YES. However LLMs that can answer both styles of question already exist, just not for long videos.

I think this one is easier than #2, given 1. how good speech to text transcription already is 2. how much shorter movie scripts are than novels. 3. I think scripts usually contain enough information to answer this kind of question. 4. The additional visual information makes the problem easier, not harder.