Context: https://x.com/Simeon_Cps/status/1706078819617063304?s=20

Resolves YES if it's revealed that OpenAI had achieved AGI internally before the creation of this market.

I'll use OpenAI's AGI definition: "By AGI, we mean highly autonomous systems that outperform humans at most economically valuable work.".

Related questions

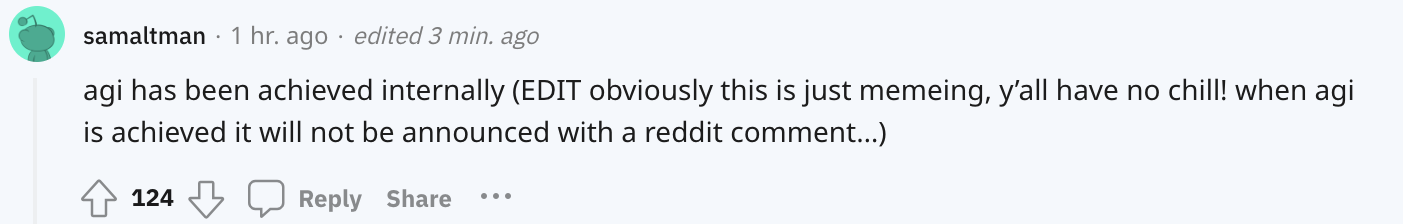

No, this market should not resolve. If the market was 'will someone else at OpenAI make any public statement that implies this' it was whether it actually happened. I still find it highly, highly unlikely that any such thing happened. And if it did, the last thing I care about is losing a little bit of manna, I wouldn't be wasting time writing this comment.

@ZviMowshowitz Hi Zvi, I was just testing if there was a bot predicting automatically based on recent comments. I expected there to be one but it seems like nothing happened.

@MrLuke255 I'll use OpenAi's definition: "By AGI, we mean highly autonomous systems that outperform humans at most economically valuable work."

@firstuserhere Correct. I'm willing to leave it up to a vote if there is sufficient debate or if OpenAI change their definition of AGI.

@Alfie Imo in the unlikely case where they do have powerful AI, they probably haven't hooked it up to any robots or anything. Though a generally better reasoner should in principal be able to do better than existing LLMs hooked up to robots, but do we have general purpose enough robots to do construction reasonably?

So I think if we just directly follow that definition then there would be a bunch of cases where it would obviously be a significantly good reasoner that wouldn't count..