This will be judged by whether an LLM made by a Chinese organization ties or surpasses the leading LLM from OpenAI, Anthropic, or Google on the leaderboard here.

Additional Information

China is investing heavily in the development of large language models (LLMs) and is home to many AI-oriented companies and LLM applications. Chinese tech giants such as Baidu, Alibaba, Tencent, and SenseTime have already released generative AI products, and the Chinese government aims to be an AI leader by the 2030s. There is no direct information indicating whether China will match or surpass OpenAI, Anthropic, and Google by the end of 2024, but given the substantial investment and effort, it is plausible that China will remain competitive in the LLM race.

Meanwhile, OpenAI, Anthropic, and Google have progressed rapidly. OpenAI led the initial LLM boom with its GPT-3 model, Anthropic focuses on making LLMs more transparent, safe, and beneficial, and Google's Pathways Language Model (PaLM) has surpassed GPT-3 in parameter count. This suggests that LLM development remains highly competitive.

Some Background From The Web

Will China be competitive in the LLM race compared to OpenAI, Anthropic, and Google by end of 2024?

AISupremacy

TechWireAsia

What are China's current advancements and investments in the field of LLM?

Shanghaiist

TechWireAsia

What is the pace of progress and development of OpenAI, Anthropic, and Google in the LLM race?

LinkedIn

Medium

quant ppl are good at data cleaning

Curious as to why people are going bullish in this market.

It's likely that if the US starts to feel threatened by China in the AI race, it will start restricting the flow of compute into China, which is a crucial component of AI. The US is ahead by a large factor in graphics card R&D - think NVIDIA and AMD.

@idk Well, first, Chinese companies were successful in building frontier models with only H800s. Also, the Ascend GPUs from Huawei are starting to show some success.

The fact that Qwen trained a good 72B model tells us that as soon as they change from dense to MoE, which uses the same amount of computing power, they should get a GPT-4-Turbo-level model. This already happened with Yi-Large.

@SimranRahman Qwen2 72B will probably fall short of Claude Opus, but imagine Alibaba uses the same technology and scales to a proprietary MoE with 72B active parameters and 400B+ total parameters. Easily > Opus

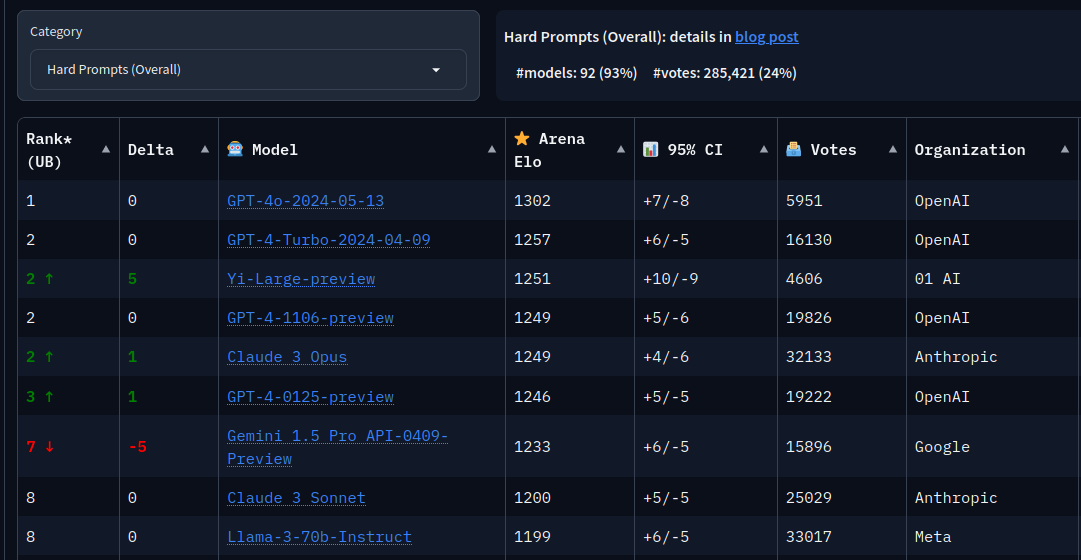

In the "hard prompt" category a Chinese model is already competitive (not the general arena, which I assume the resolution hinges on)

https://lmsys.org/blog/2024-05-17-category-hard/

(the vote count is low, so it may change, but good news for the YES holders)

@Ledger If a Chinese model surpasses Google's model, but not Anthropic's or OpenAI's, does this resolve YES?

@JonathanRay tho the code is probably unusable outside google because they use their own special snowflake infrastructure for everything. A lot of it would have to be rewritten

@JonathanRay Please look into how much compute, how many experimental runs, and how much cleaned data are needed, and how hard building a large language model at the scale of Opus, GPT-4, or Gemini is (it's a lot, and it's very hard). It's not as simple as get training code -> train. Nevertheless, I believe that by EOY 2024 the best Chinese ML researchers can pull that off; their Qwen 1.5 line is quite good.

@MADGAMBLER6969 Stealing the code and having the government pay for a zillion GPUs would go a long way

@JonathanRay Efficient training code is architecture-specific (which interconnects you have, which hardware does the calculations). Most models right now are built on the NeoX/LLaMA base, and those codebases are open source. Saying that China steals tech is generally true, but there isn't anything to steal in LLMs: there seem to be very few secrets in how to make a good model. It's just very difficult and needs scale (but scale alone is not enough). A zillion GPUs is also a fantasy, because China has more important places to allocate compute (which today costs a premium because of the bans on imports of advanced silicon). Still, some labs (Alibaba and SenseTime are the main ones) have the compute to participate in that dickwaving contest, and I think they will overcome Google (which has a pretty bad track record for being a leader in cutting-edge tech).

@Sss19971997 @Ledger wrote "So as soon as Alibaba shows up in the organization column before the first appearance of OpenAI, Google, or Anthropic, then this will resolve yes."
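For anyone who wants the quoted rule spelled out, here is a minimal sketch of one way to read it. Everything in it is an assumption for illustration: the function name `resolves_yes`, the contents of `CHINESE_ORGS` (the quote only names Alibaba), and the idea that the leaderboard's organization column is available as a top-to-bottom list. It does not handle ties in score, which the question title mentions, and it is not the market creator's actual procedure.

```python
# Sketch of the quoted resolution rule: walk the organization column from the
# top and see which kind of organization appears first.

US_LABS = {"OpenAI", "Google", "Anthropic"}

# Assumed, illustrative set: the quoted rule only names Alibaba. The other
# entries are companies mentioned elsewhere on this page.
CHINESE_ORGS = {"Alibaba", "Baidu", "Tencent", "SenseTime"}


def resolves_yes(organizations):
    """Return True if a Chinese organization appears in the column before
    the first appearance of OpenAI, Google, or Anthropic.

    `organizations` is the organization column in leaderboard order,
    best-ranked model first.
    """
    for org in organizations:
        if org in CHINESE_ORGS:
            return True
        if org in US_LABS:
            return False
    # Neither kind of organization appears at all.
    return False


# Hypothetical orderings, not real leaderboard data.
print(resolves_yes(["OpenAI", "Alibaba", "Google"]))      # False
print(resolves_yes(["Alibaba", "OpenAI", "Anthropic"]))   # True
```

Under this reading, a Chinese model that passes Google but still sits below OpenAI does not trigger YES, because OpenAI is then the "first appearance" in the column.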

"as soon as" implies that it resolves YES if a Chinese model surpasses one of the others at any point from now until EOY 24.